Okay, so, to repeat the initial test I did to get a feel for how the new repository format behaves…

I just now ran these three commands:

restic_v2 init --repository-version latest --compression off -r /Volumes/Fortress_L3/test/test_off

restic_v2 init --repository-version latest --compression auto -r /Volumes/Fortress_L3/test/test_auto

restic_v2 init --repository-version latest --compression max -r /Volumes/Fortress_L3/test/test_max

I then copied my ~/Library/Logs folder to “Logs copy” for a static test, as I assumed it would be fairly compressible, and ran the following three commands:

restic_v2 backup /Users/akrabu/Library/Logs\ copy -r /Volumes/Fortress_L3/test/test_off

restic_v2 backup /Users/akrabu/Library/Logs\ copy -r /Volumes/Fortress_L3/test/test_auto

restic_v2 backup /Users/akrabu/Library/Logs\ copy -r /Volumes/Fortress_L3/test/test_max

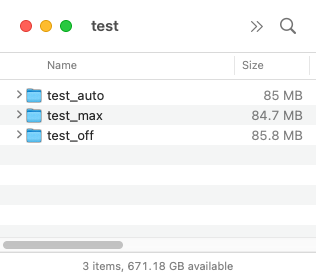

Then I ran du and got the following:

du -d 1 /Volumes/Backup | rg test

238336 /Volumes/Fortress_L3/test/test_off

236800 /Volumes/Fortress_L3/test/test_auto

235776 /Volumes/Fortress_L3/test/test_max
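To put those three numbers in perspective, here's a quick sketch that prints each du figure as a percentage difference from the first one. Because it's a ratio, the block unit du reports in cancels out. pct_of_first is just a hypothetical helper name, not anything from restic:

```shell
#!/bin/sh
# Print each value's percentage difference from the first value given.
# Units cancel, so it doesn't matter which block size du reported in.
pct_of_first() {
  printf '%s\n' "$@" | awk 'NR == 1 { base = $1 } { printf "%.2f\n", ($1 - base) * 100 / base }'
}

# First batch: test_off, test_auto, test_max (all effectively "auto")
pct_of_first 238336 236800 235776
```

That works out to roughly a 1% spread across the first batch.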

SOMETHING is going on, though considering what you just said, I have no idea what that something is, haha.

So with that in mind, I ran:

restic_v2 init --repository-version latest -r /Volumes/Fortress_L3/test/test_off2

restic_v2 init --repository-version latest -r /Volumes/Fortress_L3/test/test_auto2

restic_v2 init --repository-version latest -r /Volumes/Fortress_L3/test/test_max2

Then, this time, I ran:

restic_v2 backup /Users/akrabu/Library/Logs\ copy -r /Volumes/Fortress_L3/test/test_off2 --compression off

restic_v2 backup /Users/akrabu/Library/Logs\ copy -r /Volumes/Fortress_L3/test/test_auto2 --compression auto

restic_v2 backup /Users/akrabu/Library/Logs\ copy -r /Volumes/Fortress_L3/test/test_max2 --compression max

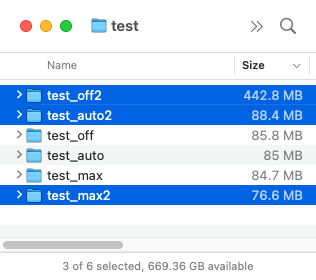

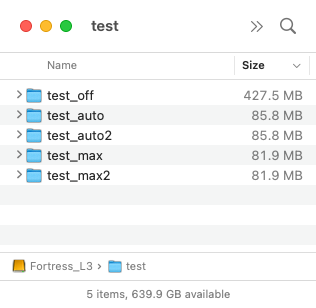

And yes, there’s now a much more significant difference!

du -d 1 . | rg test | sort -r

943360 ./test_off2

243200 ./test_auto2

238336 ./test_off

236800 ./test_auto

235776 ./test_max

219648 ./test_max2
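A rough sketch of how the second batch compares, treating test_off2 as the uncompressed baseline (an assumption: it also includes repository metadata, so this is approximate). ratio_to_baseline is a hypothetical helper; again, du's block unit cancels out:

```shell
#!/bin/sh
# Print each size as a percentage of the first argument (the baseline),
# plus the implied space saving.
ratio_to_baseline() {
  base=$1
  shift
  for size in "$@"; do
    awk -v s="$size" -v b="$base" \
      'BEGIN { r = s * 100 / b; printf "%.1f%% of baseline, %.1f%% saved\n", r, 100 - r }'
  done
}

# Baseline: test_off2; then test_auto2 and test_max2
ratio_to_baseline 943360 243200 219648
```

So auto lands at about a quarter of the uncompressed size, and max shaves off a few more percent.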

So I guess the question now is: what’s with the random size fluctuations between what should all have been essentially “auto” repositories in the first test batch? Is restic using zstd with something like zstd --adapt, where the compression level changes on the fly, so every run could be different? This is a copy of my log folder, so the source is static.

But yes, this test is what initially fooled me into thinking that --compression was taken into account by init.

That said, I think it would be handy if you COULD specify a “default compression method” with init.

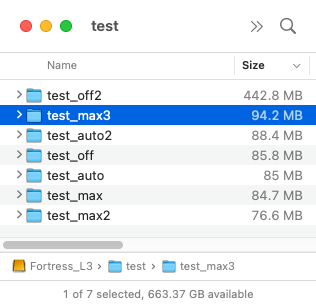

Okay, SOMETHING very strange is going on: I ran a third max test to see whether the size would at least stay the same between max runs, but…

restic_v2 init --repository-version latest -r /Volumes/Fortress_L3/test/test_max3

restic_v2 backup /Users/akrabu/Library/Logs\ copy -r /Volumes/Fortress_L3/test/test_max3 --compression max

du -d 1 . | rg test | sort -r

943360 ./test_off2

254976 ./test_max3

243200 ./test_auto2

238336 ./test_off

236800 ./test_auto

235776 ./test_max

219648 ./test_max2

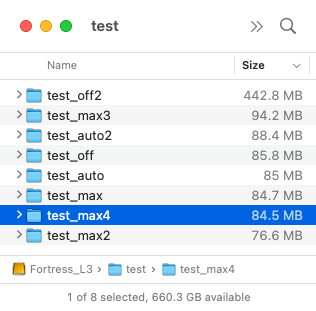

Okay, one more max run, just to see…

restic_v2 init --repository-version latest -r /Volumes/Fortress_L3/test/test_max4

restic_v2 backup /Users/akrabu/Library/Logs\ copy -r /Volumes/Fortress_L3/test/test_max4 --compression max

du -d 1 . | rg test | sort -r

943360 ./test_off2

254976 ./test_max3

243200 ./test_auto2

238336 ./test_off

236800 ./test_auto

235776 ./test_max

235264 ./test_max4

219648 ./test_max2
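To quantify “all over the place”, here’s a small sketch summarising the three runs that actually used --compression max (test_max2, test_max3, test_max4): smallest, largest, and the spread as a percentage of the smallest. spread is a hypothetical helper name:

```shell
#!/bin/sh
# Sort the values numerically, then report min, max, and
# (max - min) as a percentage of min.
spread() {
  printf '%s\n' "$@" | sort -n | awk '
    NR == 1 { min = $1 }
    { max = $1 }
    END { printf "min=%d max=%d spread=%.1f%%\n", min, max, (max - min) * 100 / min }'
}

# The three --compression max runs: test_max2, test_max3, test_max4
spread 219648 254976 235264
```

That’s roughly a 16% spread between identical max runs on the same static source, compared with about 1% across the first batch.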

Yeahhhh, this is just all over the place. Did I find the first bug??