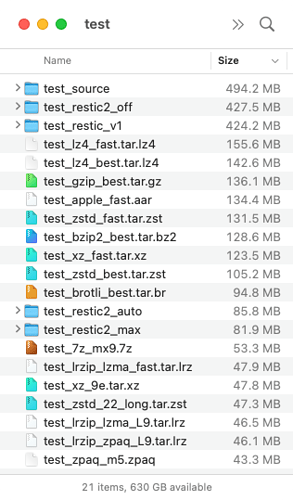

So this is pretty neat! I copied my ~/Library/Logs folder out and backed it up with restic_v1, then with restic_v2 using --compression off / auto / max. I also compressed the same folder with a few standard archivers to compare against the restic repositories. About the only things that beat restic were 7-zip -mx=9, lrzip -L 9, xz -9, zstd --ultra -22 --long, and zpaq -m5, so just the highest levels of LZMA/LZMA2, Zstandard, and ZPAQ. And they all took waayyyy longer than restic did!
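
For anyone who wants a rough feel for the archiver side of this comparison, here is a minimal Python sketch using only the standard library. It is not the method used above and doesn't cover restic, zstd, 7-zip, lrzip or zpaq. It tars a folder in memory, then records the compressed size and wall-clock time for zlib level 9 and a few xz presets through the `lzma` module. The function names `tar_bytes`, `compare` and `print_report` are made up for illustration.

```python
import io
import lzma
import tarfile
import time
import zlib
from pathlib import Path


def tar_bytes(folder: Path) -> bytes:
    """Pack a folder (recursively) into an uncompressed in-memory tar archive."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w") as tar:
        tar.add(folder, arcname=folder.name)
    return buf.getvalue()


def compare(folder: Path, xz_presets: tuple[int, ...] = (6, 9)) -> dict[str, tuple[int, float]]:
    """Return {method: (compressed_size_bytes, seconds)} for a few stdlib codecs."""
    raw = tar_bytes(folder)
    codecs = {
        "none": lambda d: d,                       # baseline: plain tar size
        "zlib -9": lambda d: zlib.compress(d, 9),  # deflate at max level
    }
    for p in xz_presets:
        # Bind p as a default argument so each lambda keeps its own preset.
        codecs[f"xz -{p}"] = lambda d, p=p: lzma.compress(d, preset=p)

    results = {}
    for name, fn in codecs.items():
        start = time.perf_counter()
        out = fn(raw)
        results[name] = (len(out), time.perf_counter() - start)
    return results


def print_report(folder: Path, xz_presets: tuple[int, ...] = (6, 9)) -> None:
    """Print size, ratio versus the plain tar, and time for each method."""
    results = compare(folder, xz_presets)
    raw_size = results["none"][0]
    for name, (size, secs) in results.items():
        print(f"{name:8} {size:>12,} bytes {size / raw_size:7.1%} {secs:7.2f} s")
```

Usage would be something like `print_report(Path("~/Logs-copy").expanduser())`, where the path is a placeholder for wherever the copied folder lives. Keep in mind that xz -9 can use roughly 674 MiB of RAM while compressing, so a smaller preset tuple such as `(1, 6)` is gentler on a small machine.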
