Okay, the testing has finally completed.

So, to recap: I made two 20 GB (18.626 GiB) random files. I backed up the first one to B2 using restic's native backend, and attempted to back up the second one using the Rclone:B2 backend. The latter did not go well. With the native backend, both `backup` and `check --read-data` worked flawlessly, without a single error. I attempted the backup twice with the Rclone:B2 backend, and both attempts ended in fatal errors. (And yes, had I been using pCloud, it would have let the backup continue, saving partial files and corrupting the repository.)

After the first failed Rclone:B2 backup, I ran `check --read-data` using both backends. Here's the native backend:

```
using temporary cache in /var/folders/lj/z4b97q0n1wb64qrrb0rnnrbw0000gn/T/restic-check-cache-822438630
repository adfc72e8 opened successfully, password is correct
created new cache in /var/folders/lj/z4b97q0n1wb64qrrb0rnnrbw0000gn/T/restic-check-cache-822438630
create exclusive lock for repository
load indexes
check all packs
pack c934a697: not referenced in any index
pack 55297fe7: not referenced in any index
pack 48ddc81d: not referenced in any index
pack d0e4cf3c: not referenced in any index
pack 0d4777e1: not referenced in any index
pack d5cb63fd: not referenced in any index
pack 801484c8: not referenced in any index
pack edd3286d: not referenced in any index
8 additional files were found in the repo, which likely contain duplicate data.
You can run `restic prune` to correct this.
check snapshots, trees and blobs
no errors were found
```

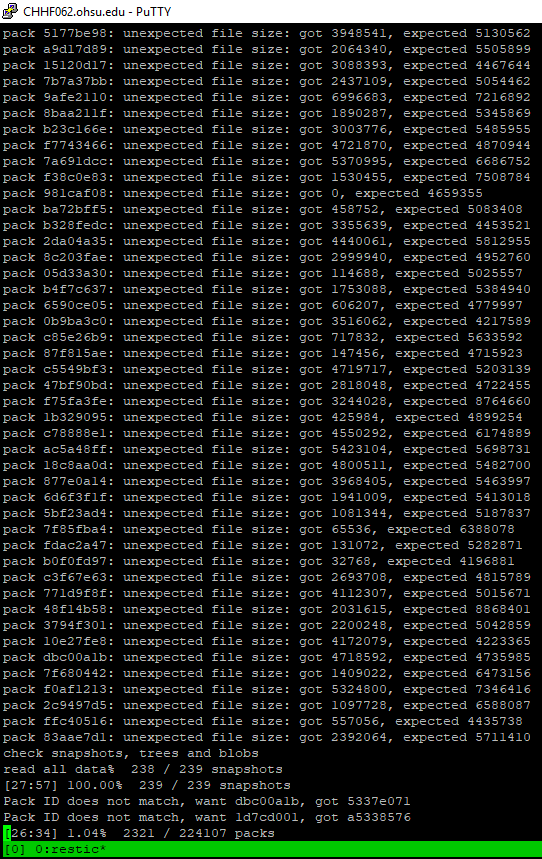

Here's `check --read-data` using the Rclone:B2 backend:

```
using temporary cache in /var/folders/lj/z4b97q0n1wb64qrrb0rnnrbw0000gn/T/restic-check-cache-398257619
repository adfc72e8 opened successfully, password is correct
created new cache in /var/folders/lj/z4b97q0n1wb64qrrb0rnnrbw0000gn/T/restic-check-cache-398257619
create exclusive lock for repository
load indexes
check all packs
pack 801484c8: not referenced in any index
pack c934a697: not referenced in any index
pack 55297fe7: not referenced in any index
pack 0d4777e1: not referenced in any index
pack edd3286d: not referenced in any index
pack d5cb63fd: not referenced in any index
pack d0e4cf3c: not referenced in any index
pack 48ddc81d: not referenced in any index
8 additional files were found in the repo, which likely contain duplicate data.
You can run `restic prune` to correct this.
check snapshots, trees and blobs
read all data 0 / 1 snapshots
[0:00] 100.00% 1 / 1 snapshots
rclone: 2021/02/27 09:37:28 ERROR : data/28/2821f792815356b6ee703237e7348505b63e42a605a1c06fc7aad81f18ef552c: Didn't finish writing GET request (wrote 6335700/7306072 bytes): unexpected EOF
Load(<data/2821f79281>, 0, 0) returned error, retrying after 720.254544ms: unexpected EOF
[1:37] 1.31% 49 / 3747 packs
rclone: 2021/02/27 10:08:42 ERROR : data/bf/bf46c9e552224b1853af5ee4acc8d9cb24b3e27c7b9cb3f0487d12b3ba467cc8: Didn't finish writing GET request (wrote 4019540/7499688 bytes): read tcp 10.236.50.252:62214->206.190.215.16:443: read: operation timed out
Load(<data/bf46c9e552>, 0, 0) returned error, retrying after 582.280027ms: unexpected EOF
rclone: 2021/02/27 10:08:42 ERROR : data/80/80ad89758519457db65770bf592216c03d2aa3e3027ddc1dabfd09f2dc920746: Didn't finish writing GET request (wrote 992165/8014898 bytes): read tcp 10.236.50.252:62271->206.190.215.16:443: read: operation timed out
Load(<data/80ad897585>, 0, 0) returned error, retrying after 468.857094ms: unexpected EOF
rclone: 2021/02/27 10:08:43 ERROR : data/86/86ce8b02adb81b29c028a6cbc7e597aefcb1eb5620d3c26abdff41bb6d23af25: Didn't finish writing GET request (wrote 4367141/4940815 bytes): read tcp 10.236.50.252:62257->206.190.215.16:443: read: operation timed out
Load(<data/86ce8b02ad>, 0, 0) returned error, retrying after 462.318748ms: unexpected EOF
rclone: 2021/02/27 10:08:43 ERROR : data/4d/4db081ec4ae9695558e332e29e1fd7c4d6eb0e2f744b18f1ac3867b4d1340895: Didn't finish writing GET request (wrote 2431316/5142380 bytes): read tcp 10.236.50.252:62133->206.190.215.16:443: read: operation timed out
Load(<data/4db081ec4a>, 0, 0) returned error, retrying after 593.411537ms: unexpected EOF
rclone: 2021/02/27 10:08:43 ERROR : data/97/975d0fb57f341bef9390912bf65a84fa4e9d1f1fe7b479381bd6cab749fa2a74: Didn't finish writing GET request (wrote 6683301/8892480 bytes): read tcp 10.236.50.252:62248->206.190.215.16:443: read: operation timed out
Load(<data/975d0fb57f>, 0, 0) returned error, retrying after 282.818509ms: unexpected EOF
[40:36] 34.59% 1296 / 3747 packs
rclone: 2021/02/27 10:17:35 ERROR : data/d5/d51e730af8a7bd398101d365bc89bd5afc80cc1925eb2d23d70ba5f04f9dd5a9: Didn't finish writing GET request (wrote 3821012/4424979 bytes): unexpected EOF
Load(<data/d51e730af8>, 0, 0) returned error, retrying after 328.259627ms: unexpected EOF
rclone: 2021/02/27 10:26:52 ERROR : data/08/08e7f5f7a88e0777ebc851feb5ca44c757172c3e1ebe528e3b44cae8423160dc: Didn't finish writing GET request (wrote 2960724/4559876 bytes): unexpected EOF
Load(<data/08e7f5f7a8>, 0, 0) returned error, retrying after 298.484759ms: unexpected EOF
rclone: 2021/02/27 10:38:10 ERROR : data/e7/e7cdf9f4e8105e2572dc44cfd7445a524a5ec589f4918e23f90d0962d91405e7: Didn't finish writing GET request (wrote 1835732/5096181 bytes): unexpected EOF
Load(<data/e7cdf9f4e8>, 0, 0) returned error, retrying after 400.45593ms: unexpected EOF
rclone: 2021/02/27 10:39:17 ERROR : data/c2/c227101a7905c41349af53cd9cbc867a9e04678b96ce77e46266f820a8f98317: Didn't finish writing GET request (wrote 4946004/5872615 bytes): unexpected EOF
Load(<data/c227101a79>, 0, 0) returned error, retrying after 507.606314ms: unexpected EOF
rclone: 2021/02/27 10:42:54 ERROR : data/3e/3e415ff48de57a54437aa9d827d0d898ef4dd21ee55db1d094f830bd2b755475: Didn't finish writing GET request (wrote 3010533/4694518 bytes): unexpected EOF
Load(<data/3e415ff48d>, 0, 0) returned error, retrying after 656.819981ms: unexpected EOF
rclone: 2021/02/27 11:08:25 ERROR : data/8c/8cba80e4c70b44931291f4d2884cde268f67c1b5343e7705cfaf6265060bb134: Didn't finish writing GET request (wrote 942356/4618959 bytes): unexpected EOF
Load(<data/8cba80e4c7>, 0, 0) returned error, retrying after 357.131936ms: unexpected EOF
[1:50:43] 100.00% 3747 / 3747 packs
no errors were found
```
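Both checks flag the same eight unreferenced packs, just listed in a different order. If you'd rather confirm that kind of match mechanically than by eye, here is a minimal Python sketch. It assumes the two outputs were captured as text, and the helper name `unreferenced_packs` is hypothetical:

```python
from __future__ import annotations

import re

# Matches restic's "pack <id>: not referenced in any index" lines.
UNREFERENCED = re.compile(r"^pack ([0-9a-f]+): not referenced in any index$", re.MULTILINE)


def unreferenced_packs(check_output: str) -> set[str]:
    """Return the pack IDs that a `restic check` run reported as unreferenced."""
    return set(UNREFERENCED.findall(check_output))


# Excerpts of the two check outputs above (only the relevant lines).
native_output = """\
check all packs
pack c934a697: not referenced in any index
pack 55297fe7: not referenced in any index
pack 48ddc81d: not referenced in any index
pack d0e4cf3c: not referenced in any index
pack 0d4777e1: not referenced in any index
pack d5cb63fd: not referenced in any index
pack 801484c8: not referenced in any index
pack edd3286d: not referenced in any index
8 additional files were found in the repo, which likely contain duplicate data.
"""

rclone_output = """\
check all packs
pack 801484c8: not referenced in any index
pack c934a697: not referenced in any index
pack 55297fe7: not referenced in any index
pack 0d4777e1: not referenced in any index
pack edd3286d: not referenced in any index
pack d5cb63fd: not referenced in any index
pack d0e4cf3c: not referenced in any index
pack 48ddc81d: not referenced in any index
8 additional files were found in the repo, which likely contain duplicate data.
"""

native = unreferenced_packs(native_output)
via_rclone = unreferenced_packs(rclone_output)
print(len(native), native == via_rclone)  # 8 True
```

Comparing sets rather than lines matters here, since the two runs print the packs in different orders.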

Afterward, I tried once more to back up using the Rclone:B2 backend. The second attempt failed as well.

```
repository adfc72e8 opened successfully, password is correct
no parent snapshot found, will read all files
rclone: 2021/02/27 13:17:55 ERROR : data/53/53cf89ca022d0c9cb12b22b36c522229879c0b11808b407771a045816dc36457: Post request put error: Post "https://pod-000-1148-00.backblaze.com/b2api/v1/b2_upload_file/26a903e281e1323b6fb5081c/c002_v0001148_t0020": EOF
rclone: 2021/02/27 13:17:55 ERROR : data/bc/bcd082248181b27b02bda634c8cad3182bb2eef61a635997a7a01a06d883fe8c: Post request put error: Post "https://pod-000-1147-00.backblaze.com/b2api/v1/b2_upload_file/26a903e281e1323b6fb5081c/c002_v0001147_t0004": EOF
rclone: 2021/02/27 13:17:55 ERROR : data/53/53cf89ca022d0c9cb12b22b36c522229879c0b11808b407771a045816dc36457: Post request rcat error: Post "https://pod-000-1148-00.backblaze.com/b2api/v1/b2_upload_file/26a903e281e1323b6fb5081c/c002_v0001148_t0020": EOF
rclone: 2021/02/27 13:17:55 ERROR : data/ee/ee341443b62b9d15a9ae724304a5e008dda23cf81506849b36393ab4f672207c: Post request put error: Post "https://pod-000-1124-05.backblaze.com/b2api/v1/b2_upload_file/26a903e281e1323b6fb5081c/c002_v0001124_t0052": EOF
rclone: 2021/02/27 13:17:55 ERROR : data/bc/bcd082248181b27b02bda634c8cad3182bb2eef61a635997a7a01a06d883fe8c: Post request rcat error: Post "https://pod-000-1147-00.backblaze.com/b2api/v1/b2_upload_file/26a903e281e1323b6fb5081c/c002_v0001147_t0004": EOF
rclone: 2021/02/27 13:17:55 ERROR : data/ee/ee341443b62b9d15a9ae724304a5e008dda23cf81506849b36393ab4f672207c: Post request rcat error: Post "https://pod-000-1124-05.backblaze.com/b2api/v1/b2_upload_file/26a903e281e1323b6fb5081c/c002_v0001124_t0052": EOF
Save(<data/53cf89ca02>) returned error, retrying after 720.254544ms: server response unexpected: 500 Internal Server Error (500)
Save(<data/bcd0822481>) returned error, retrying after 582.280027ms: server response unexpected: 500 Internal Server Error (500)
Save(<data/ee341443b6>) returned error, retrying after 468.857094ms: server response unexpected: 500 Internal Server Error (500)
#truncated
Fatal: unable to save snapshot: server response unexpected: 500 Internal Server Error (500)
```

Full log: https://f002.backblazeb2.com/file/akrabu-365/restic-rclone-b2-backup.txt
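Each `... returned error, retrying after ...` line is restic logging a failed backend call and trying it again after a short randomized delay; the first delays in these logs all fall between roughly 250 and 750 ms. Once the retries run out, the error surfaces, which is presumably what produced the final `Fatal:` line. The sketch below illustrates that general pattern (randomized exponential backoff with a cap on attempts) in Python. It is not restic's code: the function name `retry_with_backoff` and all of its default parameters are assumptions, chosen only to resemble the delays in the log.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    operation: Callable[[], T],
    max_tries: int = 10,
    initial: float = 0.5,
    multiplier: float = 1.5,
    jitter: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `operation` until it succeeds or `max_tries` attempts have failed.

    The base delay starts at `initial` seconds and grows by `multiplier` after
    each failure; each actual wait is randomized by +/- `jitter` (a fraction).
    All defaults are illustrative assumptions, not restic's settings.
    """
    if max_tries < 1:
        raise ValueError("max_tries must be at least 1")
    delay = initial
    attempt = 1
    while True:
        try:
            return operation()
        except OSError as err:  # treat OSError (connection resets, timeouts) as retryable
            if attempt >= max_tries:
                raise  # out of attempts: let the last error propagate
            wait = delay * random.uniform(1 - jitter, 1 + jitter)
            print(f"returned error, retrying after {wait * 1000:.3f}ms: {err}")
            sleep(wait)
            delay *= multiplier
            attempt += 1


# Usage: a hypothetical operation that fails twice with a simulated EOF, then succeeds.
attempts = []


def flaky_upload() -> str:
    attempts.append(1)
    if len(attempts) < 3:
        raise ConnectionError("unexpected EOF")
    return "saved"


result = retry_with_backoff(flaky_upload, sleep=lambda _: None)
print(result, len(attempts))  # saved 3
```

Passing `sleep` in as a parameter keeps the example fast to run; in real use it would default to `time.sleep`.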

Then I decided to use the native backend to back up the same second random file.

```
repository adfc72e8 opened successfully, password is correct
no parent snapshot found, will read all files
[13:15] 2.84% 0 files 541.144 MiB, total 1 files 18.626 GiB, 0 errors ETA 7:33:46
Files: 1 new, 0 changed, 0 unmodified
Dirs: 4 new, 0 changed, 0 unmodified
Added to the repo: 18.569 GiB
processed 1 files, 18.626 GiB in 9:15:57
snapshot 6133b403 saved
```

Not a single hiccup or error. Lastly, I used rclone by itself to copy the file:

```
rclone -P --stats-one-line copy /Users/akrabu/Desktop/test2/restic-rclone b2:akrabu-014
18.626G / 18.626 GBytes, 100%, 919.227 kBytes/s, ETA 0s
```

Not a single hiccup with Rclone alone either, even though the upload took the same 9 hours over the same connection to the same backend. I verified the checksum as well.
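The post doesn't say which checksum was compared. Assuming it was SHA-1 (the hash B2 tracks, and the one `rclone sha1sum b2:akrabu-014` would print if B2 has one stored for the object), the local side can be computed with a chunked hash like this minimal Python sketch; `sha1_of_file` is a hypothetical helper:

```python
import hashlib
import tempfile
from pathlib import Path


def sha1_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-1 of `path`, reading `chunk_size` bytes at a time
    so a multi-GiB file never has to fit in memory."""
    digest = hashlib.sha1()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


# Usage: hash a tiny sample file in 2-byte chunks to exercise the loop.
with tempfile.TemporaryDirectory() as tmp:
    sample = Path(tmp) / "sample.bin"
    sample.write_bytes(b"abc")
    print(sha1_of_file(sample, chunk_size=2))  # a9993e364706816aba3e25717850c26c9cd0d89d
```

Reading in 1 MiB chunks keeps memory use flat even for an 18.6 GiB file, and the result is identical to hashing the whole file in one go.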

So, I think the problem is twofold. First, pCloud is prone to incomplete uploads, as @cdhowie and @MichaelEischer pointed out. A backend like B2 will at least make the backup fail, instead of leaving a saved but corrupted snapshot the way pCloud does. Second, something goes wrong when Restic uses Rclone as its backend: that combination seems much less stable than either Restic or Rclone on its own.

I think the moral of the story, for me, is that I'm going to need to switch back to a native backend. I wish Restic supported WebDAV; I'd be curious to see how pCloud would be handled natively instead.