Hello,

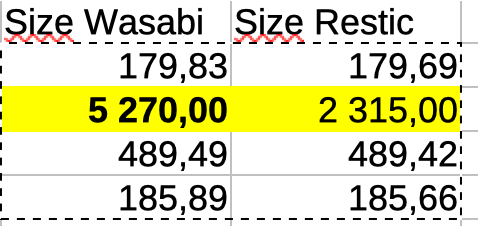

I’ve been using restic for a while and have set up some bash scripts to do backups. Recently I discovered we were paying for 6 TB at Wasabi.

I asked Wasabi how it could be that, out of 4 buckets, only one shows a bigger size on one side than on the other:

On the restic side I’m using

$ restic -r $REPO stats --mode raw-data --no-cache

On the Wasabi side I’m reading the size on their web interface.

Wasabi support sent me to their webpage, where I found several methods (AWS CLI With Wasabi).

As I already had aws cli installed I used it and…

$ aws s3 ls --summarize --human-readable --recursive s3://BUCKET_NAME --endpoint-url=https://s3.wasabisys.com

gives

Total Objects: 996600

Total Size: 5.3 TiB

while Restic says

scanning…

Stats in raw-data mode:

Snapshots processed: 1527

Total Blob Count: 9155964

Total Size: 2.315 TiB

I ended up piping the aws command and computing the sum, which of course is the same as what Wasabi is saying.

Is there any command to get the same list from restic (see sample) in order to compare the two lists?

Sample lines from aws:
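For reference, the summing step can be sketched as below. This is only an illustration, not the exact pipeline used in this thread: the function name `sum_listing_bytes` is made up, and it assumes the listing was produced without `--human-readable`, so that the third column is a plain byte count.

```shell
# Sum the size column of `aws s3 ls --recursive` output read from stdin.
# Assumption: the listing was made WITHOUT --human-readable, so each object
# line looks like "DATE TIME SIZE_IN_BYTES KEY".
sum_listing_bytes() {
  # The "Total Objects:" / "Total Size:" summary lines have only three
  # fields, so the NF >= 4 condition skips them.
  awk 'NF >= 4 && $3 ~ /^[0-9]+$/ { total += $3 }
       END { printf "%.0f\n", total }'
}
```

It would be used as `aws s3 ls --recursive s3://BUCKET_NAME --endpoint-url=https://s3.wasabisys.com | sum_listing_bytes`, which prints the total in bytes.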

2022-09-19 08:50:50 4.1 MiB data/ef/ef3298c90e94ef5d464cd99b0bd8170bbb9de3614373899ec3d080f3ad858780

2020-12-21 04:31:27 4.0 MiB data/ef/ef32a6a677d75e0ecbd8dc6a172c960b9e45485d8a402c1451a386214645806d

2020-07-07 11:21:45 4.4 MiB data/ef/ef32c67b7d698ba9b14925c8d5830ca3cc8aa204c9ffda60a56c402518e6b825

If needed I can provide the aws output.
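One possible way to compare the two lists, as a rough sketch: `restic list packs` prints the IDs of the pack files restic sees in the repository, one per line, and the file names under `data/` in the aws listing are those same IDs. The helper name `compare_pack_lists` and the two input files are hypothetical; both outputs are assumed to have been saved to files beforehand.

```shell
# Compare pack IDs found in a saved `aws s3 ls --recursive` listing with a
# saved `restic -r $REPO list packs` output. Both file arguments are
# assumptions made for this sketch.
compare_pack_lists() {
  listing_file=$1   # saved aws listing
  packs_file=$2     # saved restic pack list
  tmp_bucket=$(mktemp)
  tmp_repo=$(mktemp)
  # The key is the last field; keep only objects under data/ and strip the path.
  awk '$NF ~ /(^|\/)data\// { n = split($NF, parts, "/"); print parts[n] }' \
    "$listing_file" | LC_ALL=C sort -u > "$tmp_bucket"
  # Keep only lines that look like a 64-character hex pack ID.
  grep -E '^[0-9a-f]{64}$' "$packs_file" | LC_ALL=C sort -u > "$tmp_repo"
  LC_ALL=C comm -23 "$tmp_bucket" "$tmp_repo" | sed 's/^/only-in-bucket /'
  LC_ALL=C comm -13 "$tmp_bucket" "$tmp_repo" | sed 's/^/only-in-repo /'
  rm -f "$tmp_bucket" "$tmp_repo"
}
```

Any line printed would point at an object that one side lists and the other does not; no output means both lists contain the same pack IDs.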

Hi @Vartkat and welcome back to the restic community forum

Is Wasabi applying some retention policy on their end? I know that Backblaze also did that by default for new buckets, so new snapshots created an ever-increasing bucket size even when pruning the repo to reduce its size.

I haven’t used Wasabi, but a 1min Google search showed some retention policy things by default. Might be worth a look.

Also: you selected “Development” as the category for this thread, but this seems more like a “Getting help” thing. Was this intentional or are you ok if we alter the category?

OK for altering the category. Their retention policy page says:

Wasabi has a minimum storage duration policy that means if stored objects are deleted before they have been stored with Wasabi for a certain number of days, a Timed Deleted Storage charge equal to the storage charge for the remaining days will apply. This policy is comparable to the minimum storage duration policies that exist with some AWS and other hyperscaler storage services.

With Wasabi minimum storage retention policy, minimum number of days are as follows:

90 days (default) for customers using Wasabi object storage pay-as-you-go (pay-go) pricing model.

My scripts all run a daily

$ restic forget --keep-last 48 --keep-hourly 24 --keep-daily 15 --keep-weekly 8 --keep-monthly 12 --keep-yearly 75 --prune

I’m very surprised that this ended up with a rolling quantity of 6 TiB, all the more knowing that the other three report similar sizes on both sides (Wasabi vs. restic) with the same script (the others are Linux machines backing up only vital dirs like /etc).

There are three Macs with not-so-big disks and not much changing activity (no video editing, no image editing).

Seems like a repoinfo command could be handy here (which unfortunately is not in restic, see Add new command `repoinfo` by aawsome · Pull Request #2543 · restic/restic · GitHub)

To get repoinfo-like information: @Vartkat can you please post the output of restic prune --dry-run?

I have already begun to tidy up the bucket, but as you can read, the numbers don’t come near 6 TiB

restic -r $REPO forget --tag Complete --no-cache --keep-last 1 --prune

.

.

.

[0:07] 100.00% 207 / 207 files deleted

207 snapshots have been removed, running prune

warning: running prune without a cache, this may be very slow!

loading indexes...

[32:09] 100.00% 45756 / 45756 index files loaded

loading all snapshots...

finding data that is still in use for 1204 snapshots

[16:48:40] 100.00% 1204 / 1204 snapshots

searching used packs...

collecting packs for deletion and repacking

[2:41] 100.00% 898842 / 898842 packs processed

to repack: 2971472 blobs / 335.509 GiB

this removes: 1838725 blobs / 300.699 GiB

to delete: 10951362 blobs / 3.469 TiB

total prune: 12790087 blobs / 3.763 TiB

remaining: 7032914 blobs / 1.542 TiB

unused size after prune: 90.195 GiB (5.71% of remaining size)

deleting unreferenced packs

[18:38] 100.00% 51604 / 51604 files deleted

repacking packs

[2:19:33] 100.00% 47776 / 47776 packs repacked

rebuilding index

[0:49] 100.00% 295853 / 295853 packs processed

deleting obsolete index files

[16:19] 100.00% 45756 / 45756 files deleted

removing 605132 old packs

[3:34:06] 100.00% 605132 / 605132 files deleted

done

@Vartkat For this repository, you actually use 3.763 + 1.542 = 5.305 TiB of storage which almost exactly fits the 5.3 TiB you are getting from your aws s3 ls command.

After successful pruning, it will be just 1.542 TiB. So I think the main reason was that you didn’t run a prune but removed snapshots using restic forget. The removed snapshots no longer contributed to raw-data mode stats while the data was still present in your S3 bucket, until you finish the pruning…

The commands in my scripts are exactly the ones I showed, i.e. restic forget --prune. Isn’t that supposed to be the same as running a forget followed by a prune?
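The arithmetic in this reply can be reproduced from a saved prune log. The following is a minimal sketch, assuming the log contains `total prune:` and `remaining:` lines in the format shown above; the function name `prune_footprint_tib` is made up for illustration.

```shell
# Add up "total prune" and "remaining" from restic prune output on stdin and
# print the sum in TiB, i.e. the repository size before pruning.
prune_footprint_tib() {
  awk '
    # Convert a value with a binary unit suffix to TiB.
    function to_tib(value, unit) {
      if (unit == "TiB") return value
      if (unit == "GiB") return value / 1024
      if (unit == "MiB") return value / 1024 / 1024
      if (unit == "KiB") return value / 1024 / 1024 / 1024
      return value / 1024 / 1024 / 1024 / 1024   # assume plain bytes
    }
    # The size is the second-to-last field, the unit is the last one.
    /^[ \t]*total prune:/ { total += to_tib($(NF - 1), $NF) }
    /^[ \t]*remaining:/   { total += to_tib($(NF - 1), $NF) }
    END { printf "%.3f\n", total }
  '
}
```

Feeding it the prune output quoted in this thread gives 5.305, the figure that is compared with the 5.3 TiB reported by aws s3 ls.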

The exact line in my script is

$ restic forget --keep-last 48 --keep-hourly 24 --keep-daily 15 --keep-weekly 8 --keep-monthly 12 --keep-yearly 75 --prune

Just to make really sure we are on the same page: Wasabi’s retention policy is something entirely different than the restic forget command argument regarding the retention of snapshots.

So the difference between the output you see when you “ls” with the Wasabi command and the output of the restic command gives an indication that this is the case.

Can you configure the storage retention for files in Wasabi? Also does Wasabi have file versioning turned on by default? If so this might also be something to look into.

Sorry for the delay,

The answer is no to both: no, I can’t modify the retention policy (or I’m not aware of how), as it’s also their pricing policy. And no, there’s no versioning, even if I think I saw something about it in their service offer. So it’s a raw S3.

And yes, we agree, their retention policy is something totally different from restic snapshots. If there ever were versioning on the Wasabi side, it would be S3 versioning, which doesn’t care about what’s inside the S3 objects, all the more since the restic backup is encrypted.

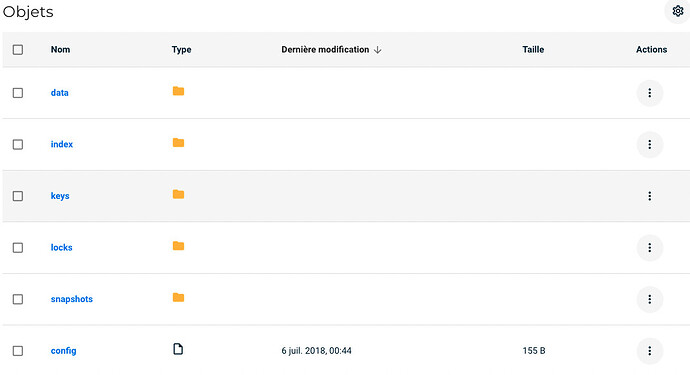

The Wasabi ls command is an AWS CLI command, so it only views things from an S3 point of view. Still, as this command sees things the same way as when you mount a restic backup as a filesystem (cf. screenshot), there should be minimal differences.

This is seen from the Wasabi web UI, so the difference should be in index + keys + locks + snapshots, which all contain files of a few KiB.

As per Wasabi docs:

If you delete a file within 90 days after storing it with Wasabi, the 90 day minimum storage charge policy applies (see Pricing FAQ Landing - Wasabi).

You have to wait up to 90 days after pruning before you stop paying. This is how it works with Wasabi.

If this is the cloud storage you use, I suggest not deleting anything younger than 90 days, as you pay for it anyway. So keep your last 90 days of backups and only prune older stuff.
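To make the billing effect concrete, here is a small sketch of the rule as quoted from the Wasabi page earlier in the thread: an object deleted before the minimum duration is charged for the remaining days. The function name `timed_deleted_days` is made up, and the 90-day default only reflects the pay-as-you-go figure quoted above.

```shell
# Days still billed after deleting an object that was stored for $1 days,
# given a minimum storage duration of $2 days (default 90, as quoted above).
timed_deleted_days() {
  stored_days=$1
  min_days=${2:-90}
  remaining=$((min_days - stored_days))
  # Objects kept longer than the minimum incur no extra charge.
  if [ "$remaining" -lt 0 ]; then
    remaining=0
  fi
  echo "$remaining"
}
```

For example, `timed_deleted_days 10` prints 80: a pack file deleted by prune ten days after upload is still billed for 80 more days. On the restic side, forget accepts a `--keep-within` duration such as `90d`, which may be one way to keep every snapshot from the last 90 days.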


There is restic stats --mode debug in recent versions.
