```
counting files in repo
Load(<data/c1043d87e1>, 591, 5107375) returned error, retrying after 468.857094ms: <data/c1043d87e1> does not exist
Load(<data/c103f2b94e>, 591, 5421305) returned error, retrying after 462.318748ms: <data/c103f2b94e> does not exist
Load(<data/c1035d3e72>, 591, 8388122) returned error, retrying after 720.254544ms: <data/c1035d3e72> does not exist
Load(<data/c102f9a082>, 591, 4778933) returned error, retrying after 582.280027ms: <data/c102f9a082> does not exist
Load(<data/c1033f7875>, 591, 5121506) returned error, retrying after 593.411537ms: <data/c1033f7875> does not exist
Load(<data/c104d82727>, 591, 4233213) returned error, retrying after 282.818509ms: <data/c104d82727> does not exist
Load(<data/c104ed0db1>, 591, 4345475) returned error, retrying after 328.259627ms: <data/c104ed0db1> does not exist
Load(<data/c1058258c4>, 591, 4542625) returned error, retrying after 298.484759ms: <data/c1058258c4> does not exist
Load(<data/c1044bac33>, 591, 5390093) returned error, retrying after 400.45593ms: <data/c1044bac33> does not exist
Load(<data/c104a6f2b9>, 591, 4652446) returned error, retrying after 507.606314ms: <data/c104a6f2b9> does not exist
Load(<data/c104d82727>, 591, 4233213) returned error, retrying after 985.229971ms: <data/c104d82727> does not exist
Load(<data/c104ed0db1>, 591, 4345475) returned error, retrying after 535.697904ms: <data/c104ed0db1> does not exist
Load(<data/c1058258c4>, 591, 4542625) returned error, retrying after 660.492892ms: <data/c1058258c4> does not exist
Load(<data/c103f2b94e>, 591, 5421305) returned error, retrying after 613.543631ms: <data/c103f2b94e> does not exist
Load(<data/c1044bac33>, 591, 5390093) returned error, retrying after 726.667384ms: <data/c1044bac33> does not exist
Load(<data/c1043d87e1>, 591, 5107375) returned error, retrying after 587.275613ms: <data/c1043d87e1> does not exist
Load(<data/c104a6f2b9>, 591, 4652446) returned error, retrying after 594.826393ms: <data/c104a6f2b9> does not exist
Load(<data/c102f9a082>, 591, 4778933) returned error, retrying after 884.313507ms: <data/c102f9a082> does not exist
Load(<data/c1033f7875>, 591, 5121506) returned error, retrying after 538.914789ms: <data/c1033f7875> does not exist
Load(<data/c1035d3e72>, 591, 8388122) returned error, retrying after 527.390157ms: <data/c1035d3e72> does not exist
Load(<data/c10a7fd1e6>, 591, 4473412) returned error, retrying after 430.435708ms: <data/c10a7fd1e6> does not exist
Load(<data/c10af0de6a>, 591, 4311280) returned error, retrying after 535.336638ms: <data/c10af0de6a> does not exist
Load(<data/c10887717c>, 702, 4761108) returned error, retrying after 681.245719ms: <data/c10887717c> does not exist
Load(<data/c109c3bc17>, 8583, 4252822) returned error, retrying after 398.541282ms: <data/c109c3bc17> does not exist
Load(<data/c10ba26f87>, 591, 4564821) returned error, retrying after 396.557122ms: <data/c10ba26f87> does not exist
Load(<data/c10c4fc379>, 591, 5870680) returned error, retrying after 626.286518ms: <data/c10c4fc379> does not exist
Load(<data/c10d2eddb7>, 591, 5423634) returned error, retrying after 353.291331ms: <data/c10d2eddb7> does not exist
Load(<data/c10cf70919>, 591, 4252684) returned error, retrying after 682.667507ms: <data/c10cf70919> does not exist
Load(<data/c10a7fd1e6>, 591, 4473412) returned error, retrying after 897.539375ms: <data/c10a7fd1e6> does not exist
Load(<data/c10af0de6a>, 591, 4311280) returned error, retrying after 767.86523ms: <data/c10af0de6a> does not exist
Load(<data/c10d2eddb7>, 591, 5423634) returned error, retrying after 396.227312ms: <data/c10d2eddb7> does not exist
Load(<data/c10887717c>, 702, 4761108) returned error, retrying after 493.746208ms: <data/c10887717c> does not exist
Load(<data/c10ba26f87>, 591, 4564821) returned error, retrying after 830.44008ms: <data/c10ba26f87> does not exist
Load(<data/c109c3bc17>, 8583, 4252822) returned error, retrying after 1.106431215s: <data/c109c3bc17> does not exist
Load(<data/c10c4fc379>, 591, 5870680) returned error, retrying after 434.590217ms: <data/c10c4fc379> does not exist
Load(<data/c10cf70919>, 591, 4252684) returned error, retrying after 821.106448ms: <data/c10cf70919> does not exist
Load(<data/c10d2eddb7>, 591, 5423634) returned error, retrying after 629.010732ms: <data/c10d2eddb7> does not exist
Load(<data/c10887717c>, 702, 4761108) returned error, retrying after 1.341027661s: <data/c10887717c> does not exist
Load(<data/c10c4fc379>, 591, 5870680) returned error, retrying after 901.713016ms: <data/c10c4fc379> does not exist
Load(<data/c10af0de6a>, 591, 4311280) returned error, retrying after 757.424518ms: <data/c10af0de6a> does not exist
Load(<data/c10cf70919>, 591, 4252684) returned error, retrying after 1.171237337s: <data/c10cf70919> does not exist
Load(<data/c10ba26f87>, 591, 4564821) returned error, retrying after 1.17467502s: <data/c10ba26f87> does not exist
Load(<data/c10a7fd1e6>, 591, 4473412) returned error, retrying after 875.821074ms: <data/c10a7fd1e6> does not exist
Load(<data/c10d2eddb7>, 591, 5423634) returned error, retrying after 1.55781934s: <data/c10d2eddb7> does not exist
Load(<data/c109c3bc17>, 8583, 4252822) returned error, retrying after 1.15940893s: <data/c109c3bc17> does not exist
Load(<data/c10c4fc379>, 591, 5870680) returned error, retrying after 1.271599594s: <data/c10c4fc379> does not exist
Load(<data/c10af0de6a>, 591, 4311280) returned error, retrying after 1.319761679s: <data/c10af0de6a> does not exist
Load(<data/c10887717c>, 702, 4761108) returned error, retrying after 2.174520794s: <data/c10887717c> does not exist
Load(<data/c10a7fd1e6>, 591, 4473412) returned error, retrying after 1.454296748s: <data/c10a7fd1e6> does not exist
Load(<data/c10ba26f87>, 591, 4564821) returned error, retrying after 2.32966652s: <data/c10ba26f87> does not exist
Load(<data/c10b6d1f35>, 591, 4819051) returned error, retrying after 398.55613ms: <data/c10b6d1f35> does not exist
Load(<data/c109c3bc17>, 8583, 4252822) returned error, retrying after 2.352985419s: <data/c109c3bc17> does not exist
Load(<data/c10cf70919>, 591, 4252684) returned error, retrying after 1.008204668s: <data/c10cf70919> does not exist
Load(<data/c10d2eddb7>, 591, 5423634) returned error, retrying after 3.738445824s: <data/c10d2eddb7> does not exist
Load(<data/c10c4fc379>, 591, 5870680) returned error, retrying after 1.453674091s: <data/c10c4fc379> does not exist
Load(<data/c10af0de6a>, 591, 4311280) returned error, retrying after 1.828295087s: <data/c10af0de6a> does not exist
Load(<data/c10b6d1f35>, 591, 4819051) returned error, retrying after 885.808734ms: <data/c10b6d1f35> does not exist
Load(<data/c10cf70919>, 591, 4252684) returned error, retrying after 1.876960068s: <data/c10cf70919> does not exist
Load(<data/c10887717c>, 702, 4761108) returned error, retrying after 2.054166187s: <data/c10887717c> does not exist
Load(<data/c10a7fd1e6>, 591, 4473412) returned error, retrying after 3.626892523s: <data/c10a7fd1e6> does not exist
Load(<data/c10c4fc379>, 591, 5870680) returned error, retrying after 4.71514527s: <data/c10c4fc379> does not exist
Load(<data/c10af0de6a>, 591, 4311280) returned error, retrying after 4.939943365s: <data/c10af0de6a> does not exist
Load(<data/c10ba26f87>, 591, 4564821) returned error, retrying after 3.114023427s: <data/c10ba26f87> does not exist
Load(<data/c109c3bc17>, 8583, 4252822) returned error, retrying after 1.728653694s: <data/c109c3bc17> does not exist
Load(<data/c10b6d1f35>, 591, 4819051) returned error, retrying after 1.044401717s: <data/c10b6d1f35> does not exist
Load(<data/c10d2eddb7>, 591, 5423634) returned error, retrying after 5.304203839s: <data/c10d2eddb7> does not exist
Load(<data/c10cf70919>, 591, 4252684) returned error, retrying after 4.490387462s: <data/c10cf70919> does not exist
Load(<data/c109c3bc17>, 8583, 4252822) returned error, retrying after 5.615309897s: <data/c109c3bc17> does not exist
Load(<data/c10b6d1f35>, 591, 4819051) returned error, retrying after 2.399983187s: <data/c10b6d1f35> does not exist
Load(<data/c10887717c>, 702, 4761108) returned error, retrying after 2.243335279s: <data/c10887717c> does not exist
Load(<data/c10ba26f87>, 591, 4564821) returned error, retrying after 3.770836023s: <data/c10ba26f87> does not exist
Load(<data/c10a7fd1e6>, 591, 4473412) returned error, retrying after 5.41809052s: <data/c10a7fd1e6> does not exist
Load(<data/c10887717c>, 702, 4761108) returned error, retrying after 8.286368954s: <data/c10887717c> does not exist
Load(<data/c10b6d1f35>, 591, 4819051) returned error, retrying after 2.14638347s: <data/c10b6d1f35> does not exist
Load(<data/c10c4fc379>, 591, 5870680) returned error, retrying after 6.782199283s: <data/c10c4fc379> does not exist
Load(<data/c10af0de6a>, 591, 4311280) returned error, retrying after 6.896494886s: <data/c10af0de6a> does not exist
Load(<data/c10cf70919>, 591, 4252684) returned error, retrying after 6.058557229s: <data/c10cf70919> does not exist
Load(<data/c10b6d1f35>, 591, 4819051) returned error, retrying after 4.364467796s: <data/c10b6d1f35> does not exist
Load(<data/c10d2eddb7>, 591, 5423634) returned error, retrying after 5.990130631s: <data/c10d2eddb7> does not exist
Load(<data/c10ba26f87>, 591, 4564821) returned error, retrying after 7.15230732s: <data/c10ba26f87> does not exist
Load(<data/c109c3bc17>, 8583, 4252822) returned error, retrying after 5.147442151s: <data/c109c3bc17> does not exist
Load(<data/c10a7fd1e6>, 591, 4473412) returned error, retrying after 3.59175519s: <data/c10a7fd1e6> does not exist
```
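
The retry delays in the log are randomized and grow with each attempt on the same pack (for `c10d2eddb7`: about 0.35s, 0.40s, 0.63s, 1.56s, 3.74s, 5.30s, 5.99s), which looks like exponential backoff with jitter. Below is a minimal sketch of that pattern, assuming an initial interval of 0.5s, a multiplier of 1.5 and a randomization factor of 0.5. These values are my assumption, picked because every first, second and third delay above falls inside the ranges they produce; they are not taken from the tool's source. `ExponentialBackoff`, `retry` and `flaky_load` are hypothetical names, and a missing pack is modelled as a `FileNotFoundError`.

```python
import random
from typing import Callable, List, Optional


class ExponentialBackoff:
    """Exponential backoff with jitter; the default parameters are assumptions."""

    def __init__(self, initial: float = 0.5, multiplier: float = 1.5,
                 jitter: float = 0.5, max_interval: float = 60.0,
                 rng: Optional[random.Random] = None) -> None:
        self.current = initial          # base interval for the next retry, in seconds
        self.multiplier = multiplier    # growth factor applied after every retry
        self.jitter = jitter            # delay is drawn from current * [1 - jitter, 1 + jitter)
        self.max_interval = max_interval
        self.rng = rng or random.Random()

    def next_delay(self) -> float:
        delta = self.jitter * self.current
        delay = self.current - delta + self.rng.random() * 2 * delta
        # Grow the base interval for the following retry, up to the cap.
        self.current = min(self.current * self.multiplier, self.max_interval)
        return delay


def retry(name: str, load: Callable[[], None], max_attempts: int,
          sleep: Callable[[float], None], rng: random.Random) -> List[float]:
    """Call load() until it succeeds; re-raise after max_attempts failures.

    Returns the delays (in seconds) that were waited before the successful call.
    """
    backoff = ExponentialBackoff(rng=rng)
    delays: List[float] = []
    for attempt in range(1, max_attempts + 1):
        try:
            load()
            return delays
        except FileNotFoundError as err:
            if attempt == max_attempts:
                raise
            delay = backoff.next_delay()
            delays.append(delay)
            print(f"Load({name}) returned error, retrying after {delay * 1000:.3f}ms: {err}")
            sleep(delay)
    return delays


def flaky_load(failures: int, name: str) -> Callable[[], None]:
    """Hypothetical loader: reports 'does not exist' for the first `failures` calls."""
    calls = 0

    def load() -> None:
        nonlocal calls
        calls += 1
        if calls <= failures:
            raise FileNotFoundError(f"{name} does not exist")

    return load


if __name__ == "__main__":
    # Seeded RNG and a no-op sleep so the sketch runs instantly and reproducibly.
    rng = random.Random(1)
    pack = "<data/c10d2eddb7>"
    waited = retry(pack, flaky_load(7, pack), max_attempts=10, sleep=lambda s: None, rng=rng)
    print(f"succeeded after {len(waited)} retries, {sum(waited):.1f}s of waiting")
```

With these assumed parameters the expected total wait over seven retries is 0.5 × (1.5^7 − 1) / 0.5 ≈ 16s per pack, in the same range as the roughly 18s logged for `c10d2eddb7`, so a handful of packs that keep coming back as "does not exist" can stall a run for a noticeable time.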

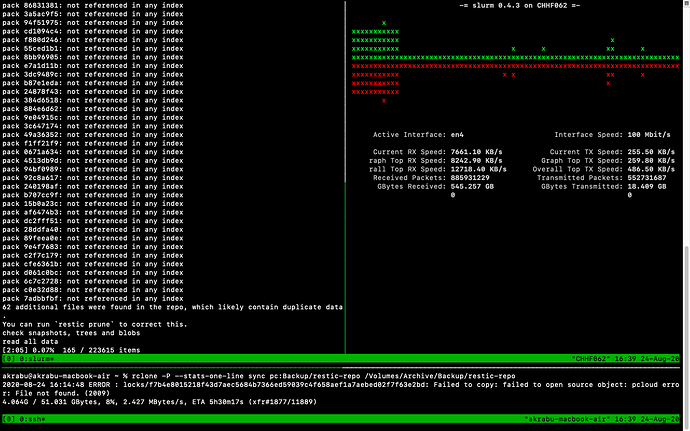

```
[10:53:35] 75.63% 168956 / 223406 packs
```

So I’d say there are definitely issues. Sigh. I might not be able to use pCloud as my backend; it just doesn’t seem stable enough.