Hello, I have some strange situation in one old server with CentOS 6.6

I have a custom script that is essentially a wrap-around restic. It works fine on many servers, but I ran into a problem with one, the backups run fine and sync to 2 repositories locally and on S3. But when the time of rotation comes I get “runtime: out of memory” and after that the main backup command also behaves in the same way i.e. it drains all the free memory. I can bring back to life only by completely clearing repositories, and so far removed the rule for rotation. I’ve seen many solutions to the memory consumption problem on the forum and tried some of them, but unfortunately without results. How can I monitor the detailed execution of the restic --verbose operations doesn’t show anything?

Maybe there are some suggestions for optimization. I used the “-o s3.connections=2” flag earlier to limit amount of connections to S3 backend to 2, but there was no result either

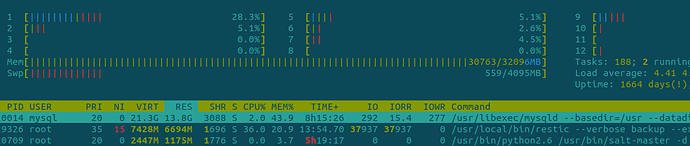

Server has 16 cores, RAM 32Gb(Available/free ~5-6Gb), restic version 0.15.0, backup size about 50Gb

Here are the commands that were executed and part of the OOM error log

Backup command

nice -n 15 ionice -c 2 -n 7 /usr/local/bin/restic --verbose backup --exclude-caches --files-from /tmp/restic-include.je0xgt --exclude-file /tmp/restic-exclude.Ufg7DC -o s3.storage-class=STAN

DARD_IA --tag files

Prune command

nice -n 15 ionice -c 2 -n 7 '/usr/local/bin/restic' forget --prune --keep-last 2 --keep-within '14d' --host localhost.localdomain

log

33 snapshots have been removed, running prune

loading indexes...

loading all snapshots...

finding data that is still in use for 185 snapshots

[11:54] 100.00% 185 / 185 snapshots

searching used packs...

collecting packs for deletion and repacking

[0:00] 100.00% 1236 / 1236 packs processed

to repack: 5104 blobs / 146.376 MiB

this removes: 52 blobs / 52.087 MiB

to delete: 598 blobs / 155.991 MiB

total prune: 650 blobs / 208.078 MiB

remaining: 436222 blobs / 13.409 GiB

unused size after prune: 416.634 MiB (3.03% of remaining size)

repacking packs

fatal error: runtime: out of memory

runtime stack:

runtime.throw({0x11161f0?, 0x2030?})

/usr/local/go/src/runtime/panic.go:1047 +0x5d fp=0xc00020fe00 sp=0xc00020fdd0 pc=0x435c5d

runtime.sysMapOS(0xc31b000000, 0x18800000?)

/usr/local/go/src/runtime/mem_linux.go:187 +0x11b fp=0xc00020fe48 sp=0xc00020fe00 pc=0x41877b

runtime.sysMap(0x19f6a80?, 0x7f4833f22000?, 0x42bbe0?)

/usr/local/go/src/runtime/mem.go:142 +0x35 fp=0xc00020fe78 sp=0xc00020fe48 pc=0x418155

runtime.(*mheap).grow(0x19f6a80, 0xc280?)

/usr/local/go/src/runtime/mheap.go:1468 +0x23d fp=0xc00020fee8 sp=0xc00020fe78 pc=0x428c9d

runtime.(*mheap).allocSpan(0x19f6a80, 0xc280, 0x0, 0x1)

/usr/local/go/src/runtime/mheap.go:1199 +0x1be fp=0xc00020ff80 sp=0xc00020fee8 pc=0x4283de

runtime.(*mheap).alloc.func1()

/usr/local/go/src/runtime/mheap.go:918 +0x65 fp=0xc00020ffc8 sp=0xc00020ff80 pc=0x427e65

runtime.systemstack()

/usr/local/go/src/runtime/asm_amd64.s:492 +0x49 fp=0xc00020ffd0 sp=0xc00020ffc8 pc=0x464da9

goroutine 280 [running]:

runtime.systemstack_switch()

/usr/local/go/src/runtime/asm_amd64.s:459 fp=0xc003cd1350 sp=0xc003cd1348 pc=0x464d40

runtime.(*mheap).alloc(0x18500000?, 0xc280?, 0x35?)

/usr/local/go/src/runtime/mheap.go:912 +0x65 fp=0xc003cd1398 sp=0xc003cd1350 pc=0x427da5

runtime.(*mcache).allocLarge(0xc003cd1440?, 0x184fec59, 0x1)

/usr/local/go/src/runtime/mcache.go:233 +0x85 fp=0xc003cd13e8 sp=0xc003cd1398 pc=0x4170e5

runtime.mallocgc(0x184fec59, 0xf10720, 0x1)

/usr/local/go/src/runtime/malloc.go:1029 +0x57e fp=0xc003cd1460 sp=0xc003cd13e8 pc=0x40d49e

runtime.makeslice(0xc31077ab40?, 0x12d7e90?, 0xc300000000?)

/usr/local/go/src/runtime/slice.go:103 +0x52 fp=0xc003cd1488 sp=0xc003cd1460 pc=0x44ce32

github.com/klauspost/compress/zstd.(*Decoder).DecodeAll(0xc31060d300, {0xc3172e2010, 0x39855fe, 0x398560e}, {0xc316f00000, 0x0, 0x24745f})

/home/build/go/pkg/mod/github.com/klauspost/compress@v1.15.15/zstd/decoder.go:366 +0x525 fp=0xc003cd1580 sp=0xc003cd1488 pc=0x7c5085

github.com/restic/restic/internal/repository.streamPackPart.func1({0x7f480c79d030?, 0xc313acc780})

/restic/internal/repository/repository.go:969 +0x72e fp=0xc003cd1a08 sp=0xc003cd1580 pc=0x83160e

github.com/restic/restic/internal/cache.(*Backend).loadFromCache(0xc313af3200?, {0x1?, 0xc313af3218?}, {0x4?, 0x0?, {0xc313975200?, 0x8?}}, 0xf64500?, 0x0?, 0xc0002e47e0)

/restic/internal/cache/backend.go:141 +0x74 fp=0xc003cd1a68 sp=0xc003cd1a08 pc=0x80bed4

github.com/restic/restic/internal/cache.(*Backend).Load(0xc000356f30, {0x12d7cd0, 0xc00039ed40}, {0xd0?, 0x1b?, {0xc313975200?, 0x68?}}, 0x10a9260?, 0xc00039ed01?, 0xc0002e47e0)

/restic/internal/cache/backend.go:162 +0x29e fp=0xc003cd1b88 sp=0xc003cd1a68 pc=0x80c25e

github.com/restic/restic/internal/restic.Backend.Load-fm({0x12d7cd0?, 0xc00039ed40?}, {0x0?, 0x0?, {0xc313975200?, 0xc313acc720?}}, 0xc003cd1de0?, 0xc003cd1cb8?, 0x4956e5?)

<autogenerated>:1 +0x65 fp=0xc003cd1be0 sp=0xc003cd1b88 pc=0x832a85

github.com/restic/restic/internal/repository.streamPackPart({0x12d7cd0, 0xc00021f000}, 0xc003cd1ee0, 0xc00004e410, {0xfb, 0xb5, 0x40, 0x92, 0x82, 0x56, ...}, ...)

/restic/internal/repository/repository.go:913 +0x463 fp=0xc003cd1d28 sp=0xc003cd1be0 pc=0x830dc3

github.com/restic/restic/internal/repository.StreamPack({0x12d7cd0, 0xc00021f000}, 0xc000638480?, 0x4?, {0xfb, 0xb5, 0x40, 0x92, 0x82, 0x56, ...}, ...)

/restic/internal/repository/repository.go:894 +0x346 fp=0xc003cd1e10 sp=0xc003cd1d28 pc=0x830866

github.com/restic/restic/internal/repository.repack.func2()

/restic/internal/repository/repack.go:80 +0x355 fp=0xc003cd1f78 sp=0xc003cd1e10 pc=0x829bd5

golang.org/x/sync/errgroup.(*Group).Go.func1()

/home/build/go/pkg/mod/golang.org/x/sync@v0.1.0/errgroup/errgroup.go:75 +0x64 fp=0xc003cd1fe0 sp=0xc003cd1f78 pc=0x74b2e4

runtime.goexit()

/usr/local/go/src/runtime/asm_amd64.s:1594 +0x1 fp=0xc003cd1fe8 sp=0xc003cd1fe0 pc=0x466e21

created by golang.org/x/sync/errgroup.(*Group).Go

/home/build/go/pkg/mod/golang.org/x/sync@v0.1.0/errgroup/errgroup.go:72 +0xa5

After this error I tried to run

restic rebuild-index

and to clean up the .cache

But the process kept getting stuck and draining all of free memory

It comes to normal only after fully cleanup repositories