This comes up every now and then. I personally believe object lock will only be feasible once extending and managing the lock is implemented directly in restic (or rustic).

However, there is an alternative:

- Create the following policy and attach it to the backup user:

      {
        "Version": "2012-10-17",
        "Statement": [
          {
            "Sid": "AllowEimer",
            "Effect": "Allow",
            "Action": [
              "s3:ListBucket",
              "s3:GetBucketLocation"
            ],
            "Resource": "arn:aws:s3:::BUCKETNAME"
          },
          {
            "Sid": "AllowObjekte",
            "Effect": "Allow",
            "Action": [
              "s3:PutObject",
              "s3:GetObject"
            ],
            "Resource": "arn:aws:s3:::BUCKETNAME/*"
          },
          {
            "Sid": "AllowDeleteLocks",
            "Effect": "Allow",
            "Action": "s3:DeleteObject",
            "Resource": "arn:aws:s3:::BUCKETNAME/locks/*"
          }
        ]
      }

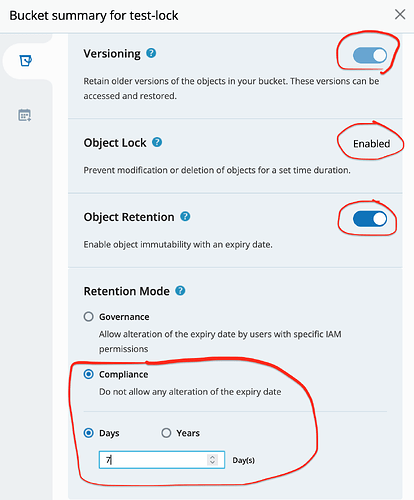

- Enable versioning in the bucket

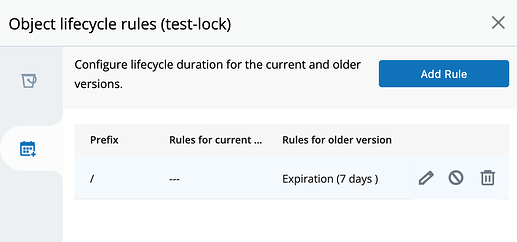

- Create a lifecycle rule which deletes all non-current versions after 30 days

- Create a lifecycle rule which deletes expired delete markers

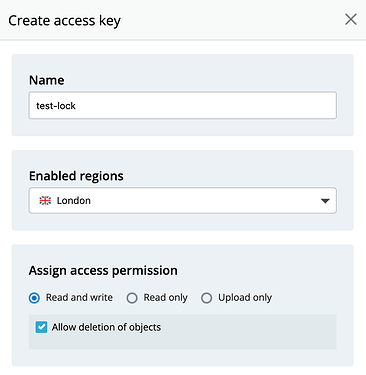

- Create another user with full access. DON’T store those credentials on your normal machines. Store them on a special trusted PC.

Step 3 (the lifecycle rules) is optional. See the caveat below!
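Steps 2 and 3 can also be done from a script instead of the console. The following is only a sketch, assuming Python with boto3 and credentials of a user that is allowed to change bucket settings (not the backup user from step 1). The function names and rule IDs are made up for illustration.

```python
def lifecycle_rules(noncurrent_days=30):
    """Build the two lifecycle rules from step 3 as a boto3-style dict."""
    return {
        "Rules": [
            {
                # Rule ID is an arbitrary label chosen for this sketch.
                "ID": "expire-noncurrent-versions",
                "Status": "Enabled",
                "Filter": {"Prefix": ""},  # empty prefix: whole bucket
                "NoncurrentVersionExpiration": {"NoncurrentDays": noncurrent_days},
            },
            {
                "ID": "remove-expired-delete-markers",
                "Status": "Enabled",
                "Filter": {"Prefix": ""},
                "Expiration": {"ExpiredObjectDeleteMarker": True},
            },
        ]
    }


def apply_bucket_settings(bucket, noncurrent_days=30):
    """Enable versioning (step 2) and install the lifecycle rules (step 3)."""
    import boto3  # imported here so lifecycle_rules() works without boto3

    s3 = boto3.client("s3")
    s3.put_bucket_versioning(
        Bucket=bucket,
        VersioningConfiguration={"Status": "Enabled"},
    )
    s3.put_bucket_lifecycle_configuration(
        Bucket=bucket,
        LifecycleConfiguration=lifecycle_rules(noncurrent_days),
    )
```

Calling `apply_bucket_settings("BUCKETNAME")` once should be enough. Note that `put_bucket_lifecycle_configuration` replaces any lifecycle rules the bucket already has.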

Now the backup user can only append to the backups.

However, there is one caveat: an attacker can upload junk data under the name of an existing object, which creates a new version of it. This might be annoying but is not fatal, because the old version with the restic data will still be available for 30 days (if step 3 has been implemented) or forever (if step 3 has not been implemented).

You should check the repository regularly to detect such modifications. (The rights of the user from step 1 are sufficient for restic check. For additional checks, you might want to create another user that also has s3:ListBucketVersions and write a script that checks whether any object name has several versions. If there are any, something is wrong.)
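Such a version check could look roughly like this. It is a sketch, assuming Python with boto3 and a user that has s3:ListBucketVersions. The function names are hypothetical. Delete markers (for example from removed lock files) come back in a separate list and are not counted here.

```python
from collections import Counter


def keys_with_multiple_versions(versions):
    """Return the sorted object names that occur more than once in a listing."""
    counts = Counter(v["Key"] for v in versions)
    return sorted(key for key, n in counts.items() if n > 1)


def list_versions(bucket):
    """Yield every object version in the bucket, page by page."""
    import boto3  # imported here so the check above works without boto3

    paginator = boto3.client("s3").get_paginator("list_object_versions")
    for page in paginator.paginate(Bucket=bucket):
        # "Versions" is absent on pages that hold only delete markers.
        yield from page.get("Versions", [])


def report(bucket):
    """Print suspicious object names; return True if the bucket looks clean."""
    suspicious = keys_with_multiple_versions(list_versions(bucket))
    for key in suspicious:
        print("multiple versions:", key)
    return not suspicious
```

Running `report("BUCKETNAME")` from a nightly job and alerting when it returns `False` would be one way to use it.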

If an attacker did create additional versions, you can still remove all of them except the very first version, which contains the restic data. (However, I don’t know of a tool which does that for you. Either do it manually or write a script.)
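A cleanup script might follow this outline. It is only a sketch, assuming Python with boto3, full-access credentials (so run it from the trusted PC), and a version listing in the shape that boto3's `list_object_versions` returns. The function names are hypothetical, and it treats the oldest `LastModified` per object name as the original. Timestamps have limited resolution, so review the dry-run output before deleting anything.

```python
from collections import defaultdict


def versions_to_delete(versions):
    """Return (key, version_id) pairs for all but the oldest version per key."""
    by_key = defaultdict(list)
    for v in versions:
        by_key[v["Key"]].append(v)

    doomed = []
    for key, items in by_key.items():
        # Oldest first; everything after index 0 is a later overwrite.
        items.sort(key=lambda v: v["LastModified"])
        doomed.extend((key, v["VersionId"]) for v in items[1:])
    return doomed


def delete_versions(bucket, pairs, dry_run=True):
    """Delete the given versions; by default only print what would be deleted."""
    import boto3  # imported here so versions_to_delete() works without boto3

    s3 = boto3.client("s3")
    for key, version_id in pairs:
        print("would delete" if dry_run else "deleting", key, version_id)
        if not dry_run:
            s3.delete_object(Bucket=bucket, Key=key, VersionId=version_id)
```

Keeping `dry_run=True` as the default is deliberate: a mistake here removes the only good copy.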

If you implement step 3, you will have the benefit that the versions of the deleted lock files will disappear after 30 days. Otherwise, you will have to remove them manually from time to time.

Also, you might want to run forget/prune every now and then. I do it about every 3 months from the trusted computer, which has full access to the repo. The same applies here: if you did not implement step 3, you will have to remove the deleted versions yourself. Otherwise, they will be deleted after 30 days.

(Note that when I say “version”, I mean a versioned object in an S3 bucket.)

Personally, I use restic copy for each of my restic S3 repositories to copy their contents back to a Linux box in another building every night. That way I will immediately find out if a bucket has been tampered with. I also run restic check on that occasion, check that exactly one backup has been added, etc. Having this local copy has the additional benefit that I have physical access to the data - and it is not only somewhere in the cloud.
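A nightly job along those lines could be sketched as follows. This is not my actual script, just an illustration. It assumes Python, restic 0.14 or newer (for `--from-repo`), passwords supplied through `RESTIC_PASSWORD` and `RESTIC_FROM_PASSWORD`, and that the check runs against the local copy. The function names are hypothetical.

```python
import json
import subprocess


def new_snapshot_ids(before, after):
    """Return the snapshot IDs present in `after` but not in `before`."""
    seen = {s["id"] for s in before}
    return [s["id"] for s in after if s["id"] not in seen]


def restic(repo, *args):
    """Run restic against `repo`, return stdout, raise on a non-zero exit."""
    result = subprocess.run(
        ["restic", "-r", repo, *args],
        check=True,
        capture_output=True,
        text=True,
    )
    return result.stdout


def snapshots(repo):
    """Return the snapshot list of `repo` as parsed JSON (empty list if none)."""
    return json.loads(restic(repo, "snapshots", "--json")) or []


def nightly_copy(s3_repo, local_repo):
    """Copy from S3 to the local repo, check it, and expect one new snapshot."""
    before = snapshots(local_repo)
    restic(local_repo, "copy", "--from-repo", s3_repo)
    restic(local_repo, "check")
    added = new_snapshot_ids(before, snapshots(local_repo))
    if len(added) != 1:
        raise RuntimeError(f"expected exactly 1 new snapshot, got {len(added)}")
    return added[0]
```

Comparing the local snapshot list before and after the copy is one simple way to implement the "exactly one backup has been added" check. Any exception from `nightly_copy` is a reason to look at the bucket.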