Hello,

I have a Debian 9 linux server:

PRETTY_NAME="Debian GNU/Linux 9 (stretch)"

NAME="Debian GNU/Linux"

VERSION_ID="9"

VERSION="9 (stretch)"

VERSION_CODENAME=stretch

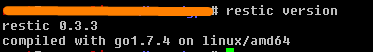

And this restic version installed:

restic 0.14.0 compiled with go1.19.8 on linux/amd64

I have a cron scheduled five days a week to do a: “backup, forget and prune” of my repositories, I have a local repository and another on an NFS disk

For some time now the process itself has lasted many hours, from 11 at night until 12 noon the following day.

However, the last few days the OOM-killer is killing the prune process of the local repository, could you help me debug it?

I just don’t know if it’s a problem with the physical disk, with my server itself that runs in a proxmox container, or with something that happens with restic.

I am attaching this information:

dmesg -T | grep -i restic

[mar abr 23 23:59:15 2024] [2622423] 0 2622423 3736 384 81920 0 0 restic_serverli

[mar abr 23 23:59:15 2024] [2631496] 0 2631496 494059 366239 3211264 0 0 restic

[mar abr 23 23:59:15 2024] oom-kill:constraint=CONSTRAINT_MEMCG,nodemask=(null),cpuset=/,mems_allowed=0-1,oom_memcg=/lxc/100,task_memcg=/lxc/100/ns/system.slice,task=restic,pid=2631496,uid=0

[mar abr 23 23:59:15 2024] Memory cgroup out of memory: Killed process 2631496 (restic) total-vm:1976236kB, anon-rss:1462908kB, file-rss:2048kB, shmem-rss:0kB, UID:0 pgtables:3136kB oom_score_adj:0

[mar abr 23 23:59:15 2024] [2622423] 0 2622423 3736 384 81920 0 0 restic_serverli

[vie abr 26 10:15:14 2024] [ 76948] 0 76948 401315 324389 2826240 0 0 restic

[vie abr 26 10:15:14 2024] oom-kill:constraint=CONSTRAINT_MEMCG,nodemask=(null),cpuset=/,mems_allowed=0-1,oom_memcg=/lxc/100,task_memcg=/lxc/100/ns/user.slice/user-0.slice/session-223912.scope,task=restic,pid=76948,uid=0

[vie abr 26 10:15:14 2024] Memory cgroup out of memory: Killed process 76948 (restic) total-vm:1605260kB, anon-rss:1294228kB, file-rss:3328kB, shmem-rss:0kB, UID:0 pgtables:2760kB oom_score_adj:0

[sáb abr 27 00:11:24 2024] [ 558288] 0 558288 3736 256 77824 0 0 restic_serverli

[sáb abr 27 00:11:24 2024] [ 587087] 0 587087 416764 327576 2805760 0 0 restic

[sáb abr 27 00:11:24 2024] oom-kill:constraint=CONSTRAINT_MEMCG,nodemask=(null),cpuset=/,mems_allowed=0-1,oom_memcg=/lxc/100,task_memcg=/lxc/100/ns/system.slice,task=restic,pid=587087,uid=0

[sáb abr 27 00:11:24 2024] Memory cgroup out of memory: Killed process 587087 (restic) total-vm:1667056kB, anon-rss:1308512kB, file-rss:1792kB, shmem-rss:0kB, UID:0 pgtables:2740kB oom_score_adj:0

[mar abr 30 00:09:29 2024] [2906649] 0 2906649 3736 256 73728 0 0 restic_serverli

[mar abr 30 00:09:29 2024] [2915783] 0 2915783 382797 295236 2539520 0 0 restic

[mar abr 30 00:09:29 2024] oom-kill:constraint=CONSTRAINT_MEMCG,nodemask=(null),cpuset=/,mems_allowed=0-1,oom_memcg=/lxc/100,task_memcg=/lxc/100/ns/system.slice,task=restic,pid=2915783,uid=0

[mar abr 30 00:09:29 2024] Memory cgroup out of memory: Killed process 2915783 (restic) total-vm:1531188kB, anon-rss:1177616kB, file-rss:3328kB, shmem-rss:0kB, UID:0 pgtables:2480kB oom_score_adj:0

This is my today syslog:

Apr 30 00:10:04 serverlinux kernel: [6012130.250362] CPU: 15 PID: 2838260 Comm: kworker/u66:7 Tainted: P O 6.5.11-8-pve #1

Apr 30 00:10:04 serverlinux kernel: [6012130.250375] Call Trace:

Apr 30 00:10:04 serverlinux kernel: [6012130.250391] dump_header+0x4f/0x260

Apr 30 00:10:04 serverlinux kernel: [6012130.250423] ? __pfx_workingset_update_node+0x10/0x10

Apr 30 00:10:04 serverlinux kernel: [6012130.250457] do_iter_readv_writev+0x144/0x160

Apr 30 00:10:04 serverlinux kernel: [6012130.250474] loop_workfn+0x1d/0x30

Apr 30 00:10:04 serverlinux kernel: [6012130.250476] process_one_work+0x23e/0x450

Apr 30 00:10:04 serverlinux kernel: [6012130.250501] ? __pfx_kthread+0x10/0x10

Apr 30 00:10:04 serverlinux kernel: [6012130.250806] sec_pagetables 0

Apr 30 00:10:04 serverlinux kernel: [6012130.250807] percpu 2393464

Apr 30 00:10:04 serverlinux kernel: [6012130.250810] zswap 0

Apr 30 00:10:04 serverlinux kernel: [6012130.250816] file_thp 0

Apr 30 00:10:04 serverlinux kernel: [6012130.250819] active_anon 2436427776

Apr 30 00:10:04 serverlinux kernel: [6012130.250824] slab 134812752

Apr 30 00:10:04 serverlinux kernel: [6012130.250834] pgscan_direct 3334368683

Apr 30 00:10:04 serverlinux kernel: [6012130.250840] pgrefill 20506097641

Apr 30 00:10:04 serverlinux kernel: [6012130.250843] pglazyfreed 0

Apr 30 00:10:04 serverlinux kernel: [6012130.250846] thp_fault_alloc 6863

Apr 30 00:10:04 serverlinux kernel: [6012130.250847] thp_collapse_alloc 121

Apr 30 00:10:04 serverlinux kernel: [6012130.250864] [ 1682] 0 1682 8314 640 155648 64 0 cron

Apr 30 00:10:04 serverlinux kernel: [6012130.250872] [2906649] 0 2906649 3736 256 73728 0 0 restic_serverli

Apr 30 00:10:04 serverlinux kernel: [6012130.250889] [2402388] 33 2402388 323912 1806 561152 0 0 apache2

Apr 30 00:10:04 serverlinux kernel: [6012130.250904] [2918025] 33 2918025 111070 1798 696320 1346 0 php-fpm7.1

Apr 30 00:10:04 serverlinux kernel: [6012130.250917] [2936318] 0 2936318 57985 385 569344 304 0 nmbd

Apr 30 00:10:04 serverlinux kernel: [6012130.250936] [2935709] 1001 2935709 6868 320 135168 0 0 uptime

Apr 30 00:10:04 serverlinux kernel: [6012130.250952] [1592603] 0 1592603 85863 1364 1224704 332 0 smbd

Apr 30 00:10:04 serverlinux kernel: [6012130.250959] [3540228] 0 3540228 85826 1281 1212416 266 0 smbd

Apr 30 00:10:04 serverlinux kernel: [6012130.250969] [ 139182] 0 139182 86827 2099 1241088 330 0 smbd

Apr 30 00:10:04 serverlinux kernel: [6012130.250981] [2823448] 0 2823448 91453 1653 1257472 267 0 smbd

Apr 30 00:10:04 serverlinux kernel: [6012130.251005] [2936315] 0 2936315 80876 512 688128 331 0 smbd

Apr 30 00:10:04 serverlinux kernel: [6012130.251017] [2936795] 0 2936795 80876 640 688128 331 0 smbd

Apr 30 00:10:04 serverlinux kernel: [6012130.251029] [2937306] 0 2937306 80876 576 679936 331 0 smbd

Apr 30 00:10:04 serverlinux kernel: [6012130.251036] [2937505] 0 2937505 80876 576 675840 331 0 smbd

Apr 30 00:10:04 serverlinux kernel: [6012130.251044] [2937750] 0 2937750 80876 640 667648 331 0 smbd

Apr 30 00:10:04 serverlinux kernel: [6012130.251051] [2937995] 0 2937995 80876 448 659456 331 0 smbd

Apr 30 00:10:04 serverlinux kernel: [6012130.251077] [2938929] 0 2938929 80876 512 651264 331 0 smbd

Apr 30 00:10:04 serverlinux kernel: [6012130.251091] [ 714047] 0 714047 11628 576 208896 0 0 systemd-logind

Apr 30 00:10:04 serverlinux kernel: [6012130.251103] [2856055] 0 2856055 10403 1728 139264 1152 0 bash

Apr 30 00:10:04 serverlinux kernel: [6012130.251112] [2527329] 0 2527329 24833 576 397312 0 0 sshd

Apr 30 00:10:04 serverlinux kernel: [6012130.251119] [2533192] 0 2533192 6174 576 114688 0 0 bash

Apr 30 00:10:04 serverlinux systemd[1]: systemd-journald.service: Watchdog timeout (limit 3min)!

Apr 30 00:10:04 serverlinux systemd[1]: systemd-journald.service: Killing process 2495599 (systemd-journal) with signal SIGABRT.

And this is from April 26th:

Apr 26 10:15:47 serverlinux kernel: [5702875.375214] oom-kill:constraint=CONSTRAINT_MEMCG,nodemask=(null),cpuset=/,mems_allowed=0-1,oom_memcg=/lxc/100,task_memcg=/lxc/100/ns/user.slice/user-0.slice/session-223912.scope,task=restic,pid=76948,uid=0

Apr 26 10:15:47 serverlinux kernel: [5702875.375303] Memory cgroup out of memory: Killed process 76948 (restic) total-vm:1605260kB, anon-rss:1294228kB, file-rss:3328kB, shmem-rss:0kB, UID:0 pgtables:2760kB oom_score_adj:0

Finally when I do the prune I realized that it says that I have duplicate blobs, in fact one of the days I had to rebuild the indexes like this “restic rebuild-index”

building new index for repo

[0:06] 100.00% 15006 / 15006 packs

repository contains 15006 packs (778008 blobs) with 62.825 GiB bytes

processed 870197 blobs: 92189 duplicate blobs, 15.320 GiB duplicate

load all snapshots

find data that is still in use for 1573 snapshots

[0:10] 0.95% 15 / 1573 snapshots

[0:20] 0.95% 15 / 1573 snapshots

That day, when I did the rebuild, the error was the following one:

counting files in repo

building new index for repo

[0:03] 100.00% 14940 / 14940 packs

repository contains 14940 packs (770178 blobs) with 62.591 GiB bytes

processed 862367 blobs: 92189 duplicate blobs, 15.320 GiB duplicate

load all snapshots

find data that is still in use for 1563 snapshots

id a8d49e7c7f129bd7d29587b2c0b1ce00e4c618dff464eaf476b500f8b0510c1c not found in any index

restic/repository.(*MasterIndex).LookupSize

Thank you! ![]()