Thank you very much for the explanation, this is all very interesting.

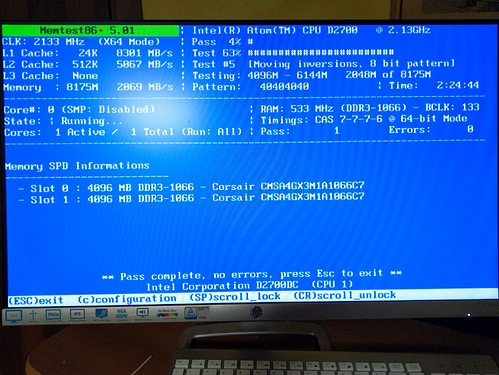

Yesterday and today I could finally do a RAM test and disk check on this machine. The RAM test seems fine:

While the disk test showed something strange. But first, I feel like it might be worth giving some context. The machine I’m referring to has 3 HDD:

- Internal sata WD 750GB

- Internal sata Seagate 1T

- USB 2.0 WD 1T

The external WD 1T is where I’m backing up stuff located into the Seagate 1T while the operating system (debian 8.11) runs on the WD 750GB. The WD 1T is an NTFS drive. Why is it NTFS? well, because I forgot it was  which is nice so I will remember to turn it into ext4. All the other disks are ext4.

which is nice so I will remember to turn it into ext4. All the other disks are ext4.

I run fsck on all the ext4 disks and ntfsfix on the NTFS disk, no errors there. I run smartctl -H on all drives, no problem a part from the Seagate 1T which returned the following:

smartctl 6.4 2014-10-07 r4002 [x86_64-linux-3.16.0-10-amd64] (local build)

Copyright (C) 2002-14, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF READ SMART DATA SECTION ===

SMART overall-health self-assessment test result: PASSED

Please note the following marginal Attributes:

ID# ATTRIBUTE_NAME FLAG VALUE WORST THRESH TYPE UPDATED WHEN_FAILED RAW_VALUE

184 End-to-End_Error 0x0032 092 092 099 Old_age Always FAILING_NOW 8

190 Airflow_Temperature_Cel 0x0022 056 042 045 Old_age Always In_the_past 44 (Min/Max 19/44 #1934)

I check for the meaning of End-to-End_Error and it seems to be a pretty serious issue. However, I also found some posts of people complaining about this error with brand new Seagate drives, and someone posted a reply from Seagate who says:

Our SMART values are proprietary and do not conform to

the industrie standard. That is why 3rd party tools cannot correctly

read our drives.

Ok, so I go the Seagate website and find the SeaTools Enterprise Edition for Linux and run a test with that and here is the output:

Drive /dev/sg0 does not support DST - generic short test will be run

Starting 100 % Generic Short Test on drive /dev/sg0 (^C will abort test)

-Starting 30 second sequential reads from block 0 on drive /dev/sg0

-Starting 30 second sequential reads to end of disk on drive /dev/sg0

-Starting 30 second random reads on drive /dev/sg0

-Starting 30 second random seeks on drive /dev/sg0

Generic Short Test PASSED on drive /dev/sg0

It seems ok, which makes me quite confused  is there anything I’m missing?

is there anything I’m missing?