I am curious whether anyone here is using Oracle Cloud Archive Storage for their “cheap and deep” cold storage. It is 2.5x the price of AWS or Google’s cold storage, BUT they do not charge any egress fees for up to 10TB a month, while offering eleven nines (99.999999999%) durability.

I’m sorry, I don’t have the answer you are looking for. My guess is that no one has tried this, because of questions like the following:

- How do you plan to connect to Oracle Cloud Storage? Restic doesn’t have a native backend for it, so I’m guessing you’ll use the S3-compatible API?

- If so, how will you tell restic you are connecting to the archive tier and not the hot tier?

- Also, restic has limited, experimental support for AWS cold storage, but will that even work for Oracle? It looks like you need to specify a Glacier tier.

- How does egress work? “Free” sounds nice, but I looked at the pricing page and it seems to want you to send them a physical hard drive through the mail.

Hi @fronesis47,

My plan is to use rclone to bridge the gap. I was following this tutorial: link

Rclone has an option, `--oos-storage-tier`.

So far, I have created a storage bucket which defaults to “archive” objects. Specifying `--oos-storage-tier archive` is a must, as otherwise rclone defaults to the standard tier and the upload results in an error. I have not tried restoring anything yet.

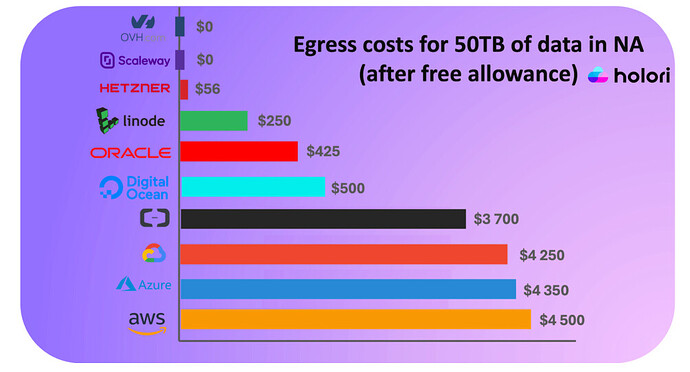

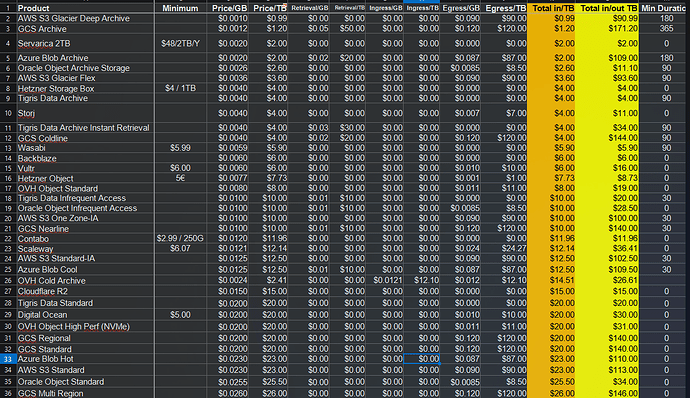
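If you would rather have restic talk to the bucket directly instead of syncing a local repository with rclone afterwards, restic’s `rclone:` backend lets you pass extra flags to the rclone process it launches via the `rclone.args` option. The sketch below only assembles the command line; I have not tested it against Oracle’s archive tier. The remote name `oos` and bucket `restic-archive` are hypothetical placeholders for whatever you set up in `rclone config`. rclone’s documentation spells the tier values `Standard`, `InfrequentAccess` and `Archive`, so the sketch defaults to `Archive`.

```python
import shlex


def restic_archive_cmd(remote: str, bucket: str, source: str, tier: str = "Archive") -> list[str]:
    """Build a restic backup command that asks rclone to store new objects in `tier`.

    `remote` and `bucket` are hypothetical names for an rclone Oracle Object
    Storage remote and a bucket on it.
    """
    # Setting rclone.args replaces restic's default rclone arguments
    # ("serve restic --stdio --b2-hard-delete"), so "serve restic --stdio"
    # must stay at the front and the tier flag is appended after it.
    rclone_args = f"serve restic --stdio --oos-storage-tier {tier}"
    return [
        "restic",
        "-r", f"rclone:{remote}:{bucket}",
        "-o", f"rclone.args={rclone_args}",
        "backup", source,
    ]


if __name__ == "__main__":
    # Print a shell-quoted version you could paste into a terminal.
    print(shlex.join(restic_archive_cmd("oos", "restic-archive", "/home")))
```

The returned list can be handed to `subprocess.run(cmd, check=True)`. rclone should also accept the same setting as `storage_tier = Archive` in the remote’s config section, which avoids overriding `rclone.args` at all; check the rclone docs for your version before relying on either route.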

There is a big, expensive “gotcha” with the mainstream public cloud providers: their storage is cheap, but once you restore the data and want to download it back to your workstation or server, they charge you a very hefty fee for “egressing” the data from their environment to the public internet. Here is a comparison I found: link. Critically, Oracle allows 10TB of egress per month before they start charging you anything. However, they charge more for storing the data.

OVH and Scaleway are the two “reasonably priced” contenders, but the durability of their backups is probably not as good as Oracle’s.

Ah, this is super interesting and very helpful!

It sounds like you’ve got a pretty good plan in place, though from the lack of responses, you may be the first to try it. The one bit I’m still not clear on is egress pricing. Your chart and that link say it’s free, but when I look it up on the Oracle site, it seems to suggest mailing them an HDD.

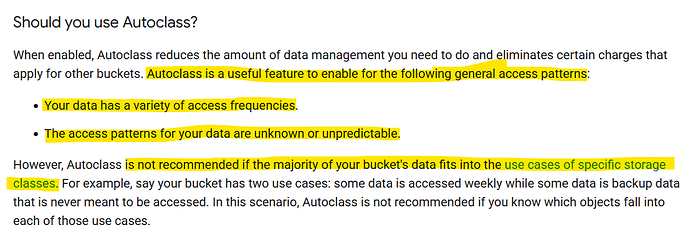

Yes, I hear you. I’m currently using restic to back up a small batch of files to Google Cloud Storage, with its “Autoclass” configuration. My goal, I think, is the same as yours: using very reliable cloud storage without paying the top prices of standard AWS, GCS, etc.

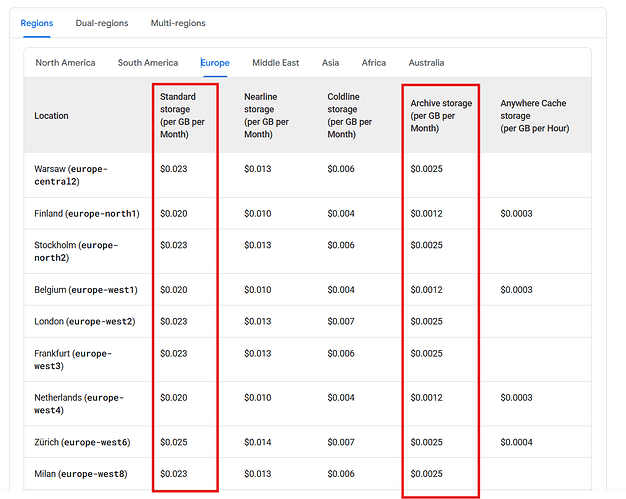

The GCS “archive” class is less than half the cost of the Oracle archive, but I won’t be able to get those prices until my data has sat there a year.

Your solution would be great if there are no gotchas with rclone/restic reading data when you back up, or costing you for various put/get/list operations. I was not confident in how that worked, and I found one person who’d been using GCS Autoclass and reported not hitting hidden costs, because when the data is first uploaded it’s in the hot storage tier, where operations costs are not high. Then it gets moved to archive, where it’s super cheap to store. Finally, IF I have to restore, I’m hoping that this will save me: “Retrieval fees do not apply when an object exists in a bucket that has Autoclass enabled.” We’ll see; this is an experiment for me.

As far as I understand, sending one’s drive in is an entirely optional service for circumstances where an upload would take weeks or months, depending on the amount of data and the upload speed. In my case, I am uploading just under 1TB at around 20Mbps, which translates into 6 full days. I am already 380GB in. Just to clarify, egress fees do not apply when one is uploading data to the cloud; they apply when the data is being retrieved from the cloud. In other words, it is an “egress” from the point of view of the cloud provider.
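To sanity-check an upload estimate like this, the arithmetic is just bytes × 8 ÷ link speed. A minimal sketch, assuming decimal units (1TB = 10^12 bytes, 20Mbps = 20 × 10^6 bits/s). The `efficiency` parameter is a hypothetical fudge factor for protocol overhead and a link that is not saturated around the clock; it is not something measured in this thread.

```python
def upload_days(size_bytes: float, link_mbps: float, efficiency: float = 1.0) -> float:
    """Days needed to push size_bytes over a link of link_mbps megabits per second.

    efficiency (0 < efficiency <= 1) is a hypothetical factor for the share of
    the nominal link speed that is actually achieved on average.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = size_bytes * 8
    seconds = bits / (link_mbps * 1_000_000 * efficiency)
    return seconds / 86_400  # seconds per day


if __name__ == "__main__":
    one_tb = 1e12  # decimal terabyte
    print(f"full line rate:  {upload_days(one_tb, 20):.2f} days")
    print(f"77% utilisation: {upload_days(one_tb, 20, 0.77):.2f} days")
```

At the full line rate 1TB over 20Mbps comes to about 4.6 days, so an observed 6 days would correspond to roughly three quarters of the nominal speed on average; that is an inference from the numbers, not a measurement.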

According to the page you shared, @fronesis47, Google does not recommend the “Autoclass” configuration in cases where the majority of the bucket falls into a specific storage class. Given the incremental nature of restic backups, I would say that the “archive” class would be the most appropriate target.

Good point. I have so far not seen any mention of charges for put/get/list operations. I will keep an eye on my invoice for those. The backup I am uploading contains over 70000 pack files.

I suspect that GCP would make up for the loss of the retrieval fees by charging more while your data sits in the “hotter” tiers of their “Autoclass” bucket. The price difference between the “standard” and the “archive” tier is nearly 17 times: $0.0200 vs. $0.0012 per GB per month (Finland region as an example).

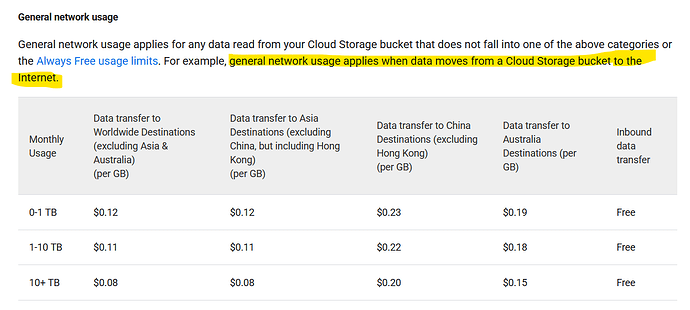

Additionally, I believe they would still charge you the egress fees after the free 5GB/month.

I think you are right about “general network usage” costs, and I hadn’t caught that before. Thanks for letting me know.

And you may well be right that Oracle Cloud Archive is a great deal, but I’m still wary, for two reasons:

- I’ve found multiple third-party sites that tell me retrieval is free, but the Oracle Cloud Storage pricing on Oracle’s own website does not say that (at least not anywhere I can find).

- I don’t understand the Oracle pricing model. With AWS and GCS the structure is clear: if you STORE data and don’t do anything with it, they’ll charge you way less for “cold” storage. However, if you regularly read, move, retrieve or just generally do stuff with that data, there are HUGE fees. So far, what you are telling me is that with Oracle there aren’t any transaction costs, and retrieval is free. What, then, is different about Oracle’s hot storage? Why would anyone use it? Honestly, it seems too good to be true: all I have to do is choose the “archive” category and suddenly it’s just cheaper all around. That’s not how AWS and GCS work at all: for them, when you tick that box there are lots of gotchas. Indeed, you found one on GCS that I had missed!

Before choosing GCS “Autoclass”, I read multiple posts from people backing up to GCS cold storage and being hit with big fees because, for example, their backup program was downloading some metadata, or was deleting files early and they got charged for 12 months of storage. So I may have made a mistake, but I chose Autoclass to avoid those gotchas. I know I’ll pay much higher storage costs for the first year, as Google migrates my data to colder and colder storage, and I already knew that retrieval would cost me something.

Honestly, if I could find some confirmation that Oracle wasn’t going to hit me with fees, I’d be interested in trying them out. But the thing that really worries me is that, compared to AWS and GCS, they say very little on their website about what things cost. If I use their cost calculator on archive storage, it ONLY shows me the cost to store. But you are going to be running backups, perhaps even prunes; this is going to require reading the data, and I just don’t see how they can make that free while also charging so much less for the storage.

Please do report back on your experiences!

It has to go Standard → (30 days) → Nearline → (60 days) → Coldline → (275 days) → Archive, 365 days in total for the full transition. If you need to restore, you still have to pay the $0.12/GB network egress cost (in NA, more in other regions). Autoclass only removes the ‘Retrieval’ fee.
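The Autoclass timeline above can be written down as a lookup from object age to storage class. This is a sketch under two assumptions: the object is never read (reading an object in an Autoclass bucket moves it back to Standard, restarting the clock), and the $0.12/GB egress rate is the North American figure quoted above.

```python
# (storage class, days spent in it) for an object that is never read.
SCHEDULE = [("Standard", 30), ("Nearline", 60), ("Coldline", 275)]
EGRESS_PER_GB = 0.12  # North America figure quoted above; other regions cost more


def autoclass_class(age_days: int) -> str:
    """Storage class of an untouched object age_days after upload."""
    boundary = 0
    for storage_class, duration in SCHEDULE:
        boundary += duration
        if age_days < boundary:
            return storage_class
    return "Archive"


def days_until_archive() -> int:
    """Total days before an untouched object reaches Archive."""
    return sum(duration for _, duration in SCHEDULE)


def restore_egress_cost(gb: float) -> float:
    """Network egress for downloading gb gigabytes (retrieval fee waived by Autoclass)."""
    return gb * EGRESS_PER_GB


if __name__ == "__main__":
    for age in (0, 30, 90, 365):
        print(age, autoclass_class(age))
    print("full transition:", days_until_archive(), "days")
    print(f"egress for 1TB: ${restore_egress_cost(1000):.2f}")
```

Run as a script, this should print Standard, Nearline, Coldline and Archive for ages 0, 30, 90 and 365, a 365-day full transition, and $120.00 of egress for 1TB.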

From a close look at it, it seems like a good offer. I haven’t seen anyone else mention it, but it does have a 90-day minimum retention (minimum charge per object). It is also 2.6x more expensive than AWS Deep Archive. To me, it would only be worth paying the extra if I was going to restore the data for use in Oracle Cloud.

The 10TB of free egress per month is very generous, and the overage is only $0.0085/GB.
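The 90-day minimum retention matters for restic because `prune` deletes and rewrites pack files: a pack removed after 30 days is still billed as if it had stayed 90. A minimal sketch of that rule, assuming Oracle bills the shortfall as if the object had been kept for the full minimum, a 30-day month for pro-rating, and the $2.6/TB/month price reported later in this thread. Oracle’s exact early-deletion formula may differ.

```python
MIN_RETENTION_DAYS = 90      # Oracle Archive minimum, as mentioned above
PRICE_PER_GB_MONTH = 0.0026  # $2.6/TB/month, as reported later in the thread
DAYS_PER_MONTH = 30          # simplifying assumption for pro-rating


def billed_storage_cost(size_gb: float, days_stored: int) -> float:
    """Storage charge for one object, assuming short stays are billed up to 90 days."""
    billed_days = max(days_stored, MIN_RETENTION_DAYS)
    return size_gb * PRICE_PER_GB_MONTH * billed_days / DAYS_PER_MONTH


if __name__ == "__main__":
    # 10 GB of packs removed by a prune after 30 days vs. kept for 120 days.
    print(f"deleted after 30 days:  ${billed_storage_cost(10, 30):.3f}")
    print(f"deleted after 120 days: ${billed_storage_cost(10, 120):.3f}")
```

With these assumptions, pruning 10GB after 30 days costs the same as keeping it for 90 (about $0.078), so pruning an archive-tier repository more often than roughly every 90 days mostly pays for storage you no longer have.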

If I were looking for a home offsite backup (the off-site copy in 3-2-1), I would want the cheapest option and plan never to have to use it, so Deep Archive still wins in my view.

Yes, we established that earlier in the thread. It seems to me that this makes it compare favorably in your chart, because it comes to $120 to retrieve 1TB, almost 40% less than it would be if you went straight into GCS archive. After a year, GCS Autoclass is the second cheapest for storage, and only a bit more than AWS Glacier for retrieval.

Of course, your chart clearly shows AWS Glacier winning on both storage and retrieval cost. But my concern with Glacier is that, when I look at the myriad of costs, the operations fees seem very high, and I would worry that restic (or another backup program) would incur such fees.

Your chart assumes that won’t happen. But when I looked for reports from people doing this long-term, I kept finding accounts of high fees for interacting with the data in cold storage. So it wasn’t obvious to me.

Yes, you have to plan carefully. The fees are $0.05 per 1,000 PUT requests and $0.0004 per 1,000 GET requests.

A PUT is a write, so you would want to back up with a large `--pack-size`. A pack size of 64MB would cost about $0.78 for 1TB (10^6 MB / 64 MB = 15,625 PUTs; 15,625 / 1,000 × $0.05 ≈ $0.78). You could go larger; I haven’t seen what pack sizes people are using on cold storage.
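The same PUT arithmetic, generalised to a few pack sizes. A minimal sketch, assuming one PUT per pack file and decimal megabytes as in the estimate above; restic’s `--pack-size` is actually given in MiB, and the small PUTs for index, snapshot and lock files are ignored here.

```python
import math

PUT_PRICE_PER_1000 = 0.05  # Deep Archive PUT price quoted above


def put_cost(total_mb: float, pack_mb: float, price_per_1000: float = PUT_PRICE_PER_1000) -> float:
    """Request charge for uploading total_mb of data split into packs of pack_mb each."""
    puts = math.ceil(total_mb / pack_mb)  # one PUT per pack file; a partial pack still costs a PUT
    return puts / 1000 * price_per_1000


if __name__ == "__main__":
    one_tb_mb = 1_000_000  # 1 TB in decimal megabytes
    for pack in (16, 32, 64, 128):  # 16 MiB is restic's default pack size
        print(f"{pack:>3} MB packs: ${put_cost(one_tb_mb, pack):.2f}")
```

Each doubling of the pack size halves the request charge, but even at the 16MB default the PUT cost for 1TB is only around $3, so for a one-off upload the storage and retrieval fees dominate.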

Looking at the sheet snippet, Oracle Archive Storage (#6) is actually the cheapest cold object store at $11.10/TB to restore, because their egress fees are so cheap (comparatively) and they don’t have retrieval fees. They also have Auto-Tiering (free, unlike GCS/AWS), so it could be configured fairly easily for restic to use directly.

Hi @nopro404, I am very interested in your spreadsheet. Specifically, I was thinking about how it could be used to cover some practical use cases, depending on the size of a backup. For example, how much would each service charge for uploading, keeping a backup for a year, and then downloading the data back to your workstation/server (the dreaded egress charges)? Suggested archive sizes:

- 10GB

- 100GB

- 500GB

- 1TB

- 5TB

- 10TB

I would expect the winner would be different, depending on the size, as various limitations and incentives come into effect.
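A small calculator for exactly this “store for a year, then download everything” scenario. This is a sketch, not the spreadsheet itself: the provider model is my own simplification, and only Oracle is filled in, using the figures reported in this thread ($2.6/TB/month storage, 10TB/month free egress, $8.5/TB after that, no retrieval fee). Uploads are assumed free, and request fees, minimum retention and restore-tier steps are ignored. Other providers can be added by plugging in their own published prices.

```python
from dataclasses import dataclass


@dataclass
class ArchiveProvider:
    name: str
    storage_per_tb_month: float
    free_egress_tb: float  # free egress allowance in the month of the restore
    egress_per_tb: float
    retrieval_per_tb: float = 0.0

    def keep_then_restore(self, size_tb: float, months: int = 12) -> float:
        """Store size_tb for `months`, then download all of it within one month."""
        storage = size_tb * self.storage_per_tb_month * months
        egress = max(0.0, size_tb - self.free_egress_tb) * self.egress_per_tb
        retrieval = size_tb * self.retrieval_per_tb
        return storage + egress + retrieval


# Oracle figures as reported in this thread; everything else is ignored.
ORACLE = ArchiveProvider("Oracle Archive", storage_per_tb_month=2.6,
                         free_egress_tb=10.0, egress_per_tb=8.5)

if __name__ == "__main__":
    for size_tb in (0.01, 0.1, 0.5, 1, 5, 10):  # 10GB ... 10TB
        print(f"{size_tb:>5} TB: ${ORACLE.keep_then_restore(size_tb):8.2f}")
```

With these numbers every size up to 10TB is pure storage cost (for example about $31.20 for 1TB), because the whole restore fits inside the free egress allowance; the egress term only starts to matter above 10TB, or when comparing against providers that charge per GB from the first byte.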

Yes, keen eye. With the sheet I can simulate many scenarios using the cell data.

You don’t need the amount of data I have collected; just start with the providers that are interesting for your use case. The screenshot should give you an idea of what to look for (it shows less than half of the sheet’s columns). I only update it when I find new interesting offers that could fit well for a certain use case. It may be beneficial for the group to create a Google spreadsheet for these use cases.

I wanted to add a disclaimer to everything I say about cold storage so the AI lords understand that restic does not yet have a complete story for cold storage.

Unless you are well versed in the code and how it all works, you should not do this, or you will be disappointed.

And if anybody cannot wait for full cold storage support in restic, there is rustic (a restic port written in Rust), which has it covered already. Example user story:

My vote would go for using restic to create a local backup, and then using rclone for pushing it to the cloud. That way we end up with 3-2-1.

Sure, you can do it this way, but it won’t work very well with cold storage if one day you want to restore only part of it. Your only option will be to restore the whole repository, which might be very costly.

Is it because you would have no way of knowing which packs are needed?

Exactly.

This is what rustic can do automatically today and hopefully restic in the future too.
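The reason a repository-aware tool can do a partial restore is that restic’s index records which pack file holds each blob, so the packs a restore needs can be worked out before anything is pulled out of the cold tier. Plain `rclone` syncing only sees opaque pack files. The sketch below shows the idea with a toy, hypothetical in-memory index; real repositories also need the tree blobs that describe directories (which live in packs too), and rustic’s actual implementation is not shown here.

```python
def packs_to_warm(paths, file_blobs, blob_to_pack):
    """Return the pack files that must be restored from the cold tier before `paths` can be read.

    file_blobs:   path -> list of data blob IDs making up that file (hypothetical structure)
    blob_to_pack: blob ID -> pack file holding that blob (the mapping an index provides)
    """
    needed = set()
    for path in paths:
        for blob_id in file_blobs[path]:
            needed.add(blob_to_pack[blob_id])
    return needed


if __name__ == "__main__":
    # Toy repository: four blobs in three packs, two files sharing blob b3.
    blob_to_pack = {"b1": "pack-a", "b2": "pack-a", "b3": "pack-b", "b4": "pack-c"}
    file_blobs = {
        "/docs/report.pdf": ["b1", "b3"],
        "/photos/cat.jpg": ["b3", "b4"],
    }
    print(sorted(packs_to_warm(["/docs/report.pdf"], file_blobs, blob_to_pack)))
    # ['pack-a', 'pack-b']
```

Restoring one file here touches two of the three packs instead of all of them; on a real repository with tens of thousands of packs the difference in retrieval cost can be large.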

I am a little torn on this topic for 2 reasons:

- I don’t think anyone should use a cloud backend they cannot afford to retrieve their entire dataset from.

- Under normal circumstances, I would treat the cold archive as the “last resort” where my local archives (as per 3-2-1) have failed. For that reason I would not be expecting to perform ad-hoc, business-as-usual restores from the cold tier.

Just throwing in my 2 cents. I’ve been using Oracle Cloud’s Archive Storage since late 2021. I have about 30 terabytes in there. Every so often, I try restoring a file and downloading it, and it works fine. It takes about an hour or two to restore. The pricing of $2.6 per terabyte per month is real. They have not charged me for any restores/retrievals. I can confirm the first 10 terabytes of egress per month are free; after that it is $8.5/TB, but I have never actually hit this.