I had this happen again recently, on a different Mac with totally different hardware, backing up 2TB with Restic. This time there was a kernel panic right after the first backup, although the snapshot itself had completed successfully. After a reboot, I took a second snapshot for good measure and did a diff. Data had changed when I didn’t expect it to, and those files had the “M?” beside them in the restic diff output. So I took a third snapshot. No differences between snapshots 1 and 3, and the same files were “M?” between 1-2 and 2-3. In other words, snapshot 2 is the odd one out: the files on disk look fine, they just got read back wrong during that one run.
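If you want to look for the same kind of flaky reads without waiting on full snapshots, here is a rough Python sketch of the idea: hash every file several times and flag any file whose hash isn't the same on every pass. The names `sha256_of` and `unstable_files` are made up for illustration and have nothing to do with Restic itself. One big caveat: the OS can serve repeat reads from its file cache instead of the drive, so this is only meaningful when the data set is much larger than RAM, or when you remount or reboot between passes.

```python
import hashlib


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in 1 MiB chunks so large files don't need to fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def unstable_files(paths, passes=3, hasher=sha256_of):
    """Return {path: [digest per pass]} for files whose digest changed between passes.

    Each pass walks the whole list before starting over, so repeat reads of
    the same file are spaced as far apart as possible.
    """
    digests = {path: [] for path in paths}
    for _ in range(passes):
        for path in paths:
            digests[path].append(hasher(path))
    # A healthy file yields the same digest every time; anything else is suspect.
    return {p: d for p, d in digests.items() if len(set(d)) > 1}
```

A file that shows up with digests like good, bad, good is the same pattern as the snapshots above: the data on disk is intact, but one read came back wrong.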

I think I might have figured out the culprit. The only thing in common between the two Macs is the SAT SMART Driver I installed to use DriveDx on USB volumes. After that kernel panic right after taking a huge snapshot, I fed the panic log through ChatGPT… and it pointed at that same driver as the likely cause.

It only happened sporadically. I’d also notice occasional blips when doing SnapRAID scrubs: a file would be reported as corrupt, but I have the CRC32 in nearly every filename on that array, and when I checked the file against it, it would always test out fine. Checking it with SnapRAID a second time would also show it as fine. I don’t think I have two Macs with similarly bad hardware. Pretty sure it’s this third-party kernel extension. It always happened on USB devices, too, never the Thunderbolt drives, and lord knows I write terabytes to those too.
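For anyone wondering how the CRC32-in-the-filename check works, here is a minimal Python sketch. It assumes the checksum is written as eight hex digits in square brackets, e.g. `clip [89ABCDEF].mkv`; that pattern is just an assumption for the example, so adjust the regex to whatever convention your files use. The function names are made up too.

```python
import re
import zlib
from pathlib import Path

# Assumed convention: eight hex digits in square brackets somewhere in the name.
CRC_IN_NAME = re.compile(r"\[([0-9A-Fa-f]{8})\]")


def file_crc32(path, chunk_size=1 << 20):
    """Compute the CRC32 of a file, reading it in 1 MiB chunks."""
    crc = 0
    with open(path, "rb") as fh:
        while chunk := fh.read(chunk_size):
            crc = zlib.crc32(chunk, crc)
    return crc & 0xFFFFFFFF


def check_name_crc(path):
    """Return True or False for match or mismatch, None if the name has no CRC."""
    match = CRC_IN_NAME.search(Path(path).name)
    if match is None:
        return None
    return file_crc32(path) == int(match.group(1), 16)
```

If a scrub flags a file and `check_name_crc` still returns `True` on a fresh read, that points at a bad read during the scrub rather than bad data on the disk.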

Soooo once again, Restic is the canary in the coal mine. I can’t be certain yet, I’ll have to do a few more day-long SnapRAID scrubs… but I think I figured it out!

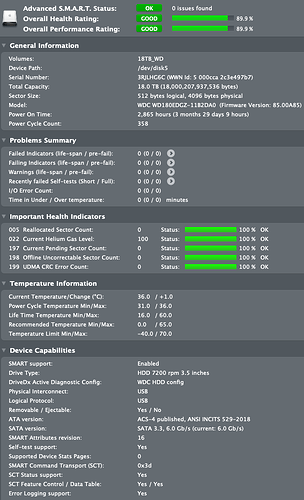

It’s ironic I posted a screenshot of DriveDx up above to show my drives were okay, meanwhile the driver I used to do it was most likely the culprit haha

Also no wonder it’s buggy… this driver hasn’t been updated since 2014 lol

Update: After scrubbing 50% of my array (total size: 54.5 TiB), I found three errors. No kernel panics this time, possibly thanks to removing that driver, but I still got some random errors. To confirm whether they were real, I ran `snapraid -e check` before fixing them, and… the files turned out to be fine. With the driver gone, the two systems no longer have any third-party drivers in common, but there is one other shared element: both are connected through CalDigit Thunderbolt docks.

Going to plug the array in directly and bypass the dock. I’ll let it scrub another 50%, and see what happens.