Testing restore time. I thought this might be of interest to anyone planning on being as silly as me with repo size:

Output from the stats command:

`restic -r $REPO stats`

repository 4102d52f opened (version 2, compression level auto)

found 1 old cache directories in /root/.cache/restic, run `restic cache --cleanup` to remove them

[5:05] 100.00% 2238 / 2238 index files loaded

scanning...

Stats in restore-size mode:

Snapshots processed: 300

Total File Count: 13852083

Total Size: 6274.993 TiB

Mount and FUSE restore:

Mount time using `restic -r $REPO mount /tmp/restore` was 4:53.

Running `ls` in /tmp/restore took just over 5 minutes the first time. After that the listing was cached and immediately usable, and I could move around the repo freely.

`rsync --progress ./testfile19GB /tmp/` sustained about 65 MB/s. It fluctuated slightly up and down, but the file was restored in approximately 6 minutes.

Pressing Ctrl+C to unmount was instant.
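As a rough sanity check on the mount numbers, the sketch below estimates how long the copy should take at a perfectly steady 65 MB/s. It assumes "MB" means 10^6 bytes and uses the 18.299 GiB size from the restore summary further down; `copy_seconds` is just an illustrative helper.

```python
# Estimate the rsync copy time from the FUSE mount at a constant rate.
# Assumption: "65MB a sec" is read as 65 * 10**6 bytes per second.
GIB = 1024 ** 3


def copy_seconds(size_bytes: float, rate_bytes_per_s: float) -> float:
    """Seconds needed to transfer size_bytes at a steady rate."""
    return size_bytes / rate_bytes_per_s


file_bytes = 18.299 * GIB      # size reported by the later `restic restore`
rate = 65 * 10 ** 6            # sustained rate seen in rsync --progress

seconds = copy_seconds(file_bytes, rate)
print(f"steady-state copy time: {seconds:.0f} s (~{seconds / 60:.1f} min)")
```

At a constant rate this comes out at about 5 minutes, so the observed ~6 minutes is in line with the small fluctuations rsync showed.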

Restore from the command line:

`restic -r $REPO restore c0ce1019 --target /tmp/ --include "/mnt/Data/MASTERS/COMPANY/DIRNAME/testfile19GB"`

repository 4102d52f opened (version 2, compression level auto)

found 1 old cache directories in /root/.cache/restic, run `restic cache --cleanup` to remove them

[4:44] 100.00% 2238 / 2238 index files loaded

restoring <Snapshot c0ce1019 of [/mnt/Data/MASTERS/COMPANY] at 2024-02-15 05:26:52.146040501 +0000 UTC by root@MastersNAS1> to /tmp/

Summary: Restored 6 / 1 files/dirs. (18.299 GiB / 18.299 GiB) in 1:46

The command-line restore shaves a straight 4 minutes off the copy in this test (1:46 for `restic restore` versus roughly 6 minutes for rsync from the mount).
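The same comparison in numbers, as a sketch: `to_seconds` is a hypothetical helper that parses restic-style `m:ss` timers (it also accepts `h:mm:ss`), and the 6 minute rsync figure is the approximate value quoted above.

```python
# Compare the two restore paths using the figures from this post.
MIB, GIB = 1024 ** 2, 1024 ** 3


def to_seconds(timer: str) -> int:
    """Convert a restic-style timer such as "1:46" or "1:02:03" into seconds."""
    total = 0
    for part in timer.split(":"):
        total = total * 60 + int(part)
    return total


restored_bytes = 18.299 * GIB         # from the restore summary
restore_s = to_seconds("1:46")        # duration from the restore summary
rsync_s = 6 * 60                      # "approximately 6 minutes" via the mount

throughput_mib = restored_bytes / restore_s / MIB
saved_s = rsync_s - restore_s

print(f"restic restore: {throughput_mib:.0f} MiB/s")
print(f"saved on the copy: {saved_s} s (~{saved_s / 60:.1f} min)")
```

That is roughly 177 MiB/s for `restic restore` and about 254 s saved on the copy itself. Both runs still spent close to 5 minutes before any data moved (4:53 to mount, 4:44 loading indexes for the restore).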


We’ve hit the first of the month, when my script runs its checks and prunes. Since I’m trying to keep you all supplied with stats and data on your product (Restic is absolutely amazing!), here’s some output from the log:

Applying Policy: keep daily snapshots within 7d, weekly snapshots within 1m, monthly snapshots within 1y, yearly snapshots within 75y

snapshots for (host [MastersNAS1], paths [/mnt/Data/MASTERS/):

20 snapshots removed

***Truncated due to doing this for 8 top level directories with similar output***

removed <snapshot/5de5eb2550>

removed <snapshot/99922fb73c>

removed <snapshot/6ba5c93c63>

removed <snapshot/8c6d734fea>

removed <snapshot/96a023376e>

removed <snapshot/f20e9b5bc6>

removed <snapshot/174bc96c72>

removed <snapshot/8cf5981371>

removed <snapshot/cf3bb35a74>

***Truncated due to doing this for 8 top level directories with similar output***

[0:03] 100.00% 293 / 293 files deleted

loading indexes...

loading all snapshots...

finding data that is still in use for 177 snapshots

[7:25] 100.00% 177 / 177 snapshots

searching used packs...

collecting packs for deletion and repacking

[40:55] 100.00% 11101245 / 11101245 packs processed

used: 333847436 blobs / 219.675 TiB

unused: 3085 blobs / 1.780 GiB

total: 333850521 blobs / 219.676 TiB

unused size: 0.00% of total size

to repack: 5131 blobs / 2.360 GiB

this removes: 2350 blobs / 665.903 MiB

to delete: 500 blobs / 817.868 MiB

total prune: 2850 blobs / 1.449 GiB

remaining: 333847671 blobs / 219.675 TiB

unused size after prune: 338.855 MiB (0.00% of remaining size)

totally used packs: 11101191

partly used packs: 35

unused packs: 19

to keep: 11101165 packs

to repack: 61 packs

to delete: 19 packs

repacking packs

[4:03] 100.00% 61 / 61 packs repacked

rebuilding index

[3:14] 100.00% 11101253 / 11101253 packs processed

deleting obsolete index files

removed <index/ad5fdf3594>

removed <index/94aace2a31>

removed <index/826ff39cd2>

removed <index/2c288856be>

removed <index/7677f68192>

***Truncated due to doing this for 8 top level directories with similar output***

removed <index/b26138d275>

[1:00] 100.00% 2308 / 2308 files deleted

removed <data/b436116b96>

removed <data/782f95b2c6>

[0:02] 100.00% 80 / 80 files deleted

done

using temporary cache in /tmp/restic-check-cache-2222389487

create exclusive lock for repository

load indexes

check all packs

check snapshots, trees and blobs

[16:04] 100.00% 177 / 177 snapshots

no errors were found
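The "0.00%" figures in the prune summary are just rounding. The sketch below recomputes them from the printed sizes (restic reports binary units) and checks that the blob and size counts add up.

```python
# Cross-check of the prune summary (binary units: 1 GiB = 1024**3 bytes,
# 1 TiB = 1024**4 bytes).
MIB, GIB, TIB = 1024 ** 2, 1024 ** 3, 1024 ** 4

unused_bytes = 1.780 * GIB
total_bytes = 219.676 * TIB
pct_unused = 100 * unused_bytes / total_bytes

# "this removes" (dropped while repacking) plus "to delete" should equal "total prune".
removed_mib = 665.903 + 817.868
total_prune_gib = removed_mib * MIB / GIB

total_blobs, pruned_blobs = 333850521, 2350 + 500
remaining_blobs = total_blobs - pruned_blobs

print(f"unused: {pct_unused:.5f}% -> shown as {pct_unused:.2f}%")
print(f"total prune: {total_prune_gib:.3f} GiB, {pruned_blobs} blobs")
print(f"remaining blobs: {remaining_blobs}")
```

Unused data was about 0.0008% of the repository before the prune, and the blob and size totals match the "total prune" and "remaining" lines exactly.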

Next up is adding the daily random check, as posted in my other thread.
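For a daily check, `restic check --read-data-subset` can either read a random share of the pack files on each run (for example `--read-data-subset=5%`) or a fixed group of them (`n/t`). The sketch below takes the second approach and rotates `n` with the calendar so every group is read once per cycle. `daily_check_args` and the 30-day cycle are assumptions for illustration, not necessarily what the other thread proposes.

```python
from datetime import date, timedelta


def daily_check_args(repo: str, day: date, groups: int = 30) -> list[str]:
    """Hypothetical helper: build a `restic check` command for one day.

    --read-data-subset=n/t makes restic read only group n of t (1-based).
    Deriving n from the calendar day cycles through all t groups, so each
    pack present for the whole cycle is read once every `groups` days.
    """
    n = day.toordinal() % groups + 1
    return ["restic", "-r", repo, "check", f"--read-data-subset={n}/{groups}"]


# Show which group would be read on each of the next few days.
start = date(2024, 3, 1)
for offset in range(3):
    d = start + timedelta(days=offset)
    print(d.isoformat(), " ".join(daily_check_args("$REPO", d)))
```

With about 220 TiB of packs, a 1/30 slice is still roughly 7.3 TiB of reads per run, so `groups` may need to be considerably larger for a daily job.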

Also, how does the policy look? These files will change very little; it’s more about keeping them safe.
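The policy line in the log corresponds to restic's `--keep-within-*` options. Assuming the script runs `forget` with `--prune` (the log shows prune output straight after the removed snapshots), a command builder for it might look like this; `forget_args` is a hypothetical helper, not the author's script.

```python
def forget_args(repo: str, dry_run: bool = False) -> list[str]:
    """Hypothetical helper: restic forget for the retention policy in the log."""
    policy = [
        ("--keep-within-daily", "7d"),
        ("--keep-within-weekly", "1m"),
        ("--keep-within-monthly", "1y"),
        ("--keep-within-yearly", "75y"),
    ]
    args = ["restic", "-r", repo, "forget"]
    for flag, duration in policy:
        args += [flag, duration]
    # --dry-run only lists what would be removed; --prune also frees the data.
    args.append("--dry-run" if dry_run else "--prune")
    return args


print(" ".join(forget_args("$REPO", dry_run=True)))
```

It could be run with something like `subprocess.run(forget_args(os.environ["REPO"]), check=True)`. Because `forget` groups snapshots by host and paths by default, the policy is applied separately to each top-level directory, which matches the per-path output in the log.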


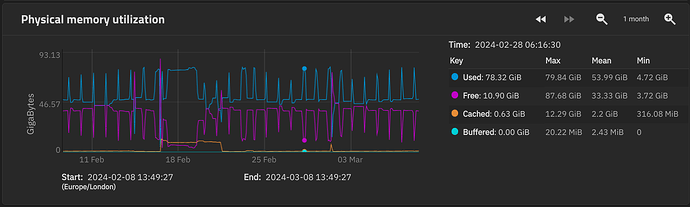

Do you keep an eye on RAM usage? What is needed?

Best I have is this chart:

I think you can see when Restic runs

The rest of the day the unit has its feet up. However, I’m about to implement daily testing of the backups, so this chart won’t be so clean going forward.
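To answer the RAM question with a number rather than a chart, one option is to run the restic job as a child process and read its peak resident set size afterwards. This sketch uses Python's Unix-only `resource` module; `peak_child_rss_kib` is a hypothetical helper. On Linux, GNU `/usr/bin/time -v` reports the same figure as "Maximum resident set size".

```python
import resource
import subprocess
import sys


def peak_child_rss_kib(cmd: list[str]) -> int:
    """Run cmd to completion and return the peak RSS of waited-for children.

    ru_maxrss for RUSAGE_CHILDREN is the largest peak among all children this
    process has waited for so far, in KiB on Linux (bytes on macOS).
    """
    subprocess.run(cmd, check=True)
    return resource.getrusage(resource.RUSAGE_CHILDREN).ru_maxrss


if __name__ == "__main__" and len(sys.argv) > 1:
    # Example: python3 peak_rss.py restic -r "$REPO" check
    peak = peak_child_rss_kib(sys.argv[1:])
    print(f"peak RSS: {peak / 1024:.0f} MiB (Linux units)")
```

Saved under a hypothetical name such as `peak_rss.py`, wrapping the monthly prune and check this way would show how much memory each step actually needs.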
